AI Therapy: A New Form Of Surveillance In A Police State?

The Allure of AI Therapy: Accessibility and Efficiency

AI therapy presents a compelling vision for the future of mental healthcare. Its proponents highlight several key advantages that could revolutionize access to treatment.

Cost-Effectiveness and Scalability

AI therapy offers the potential to significantly reduce the cost barrier to mental healthcare. Traditional therapy can be prohibitively expensive, leaving many individuals, particularly those in underserved communities, without access to crucial support.

- Lower costs: AI therapy platforms can significantly reduce costs associated with therapist salaries and office overhead.

- Wider reach: AI-powered tools can provide access to mental healthcare in remote areas lacking sufficient mental health professionals.

- 24/7 availability: Unlike human therapists, AI platforms offer continuous support, providing immediate assistance when needed. This is especially crucial for individuals experiencing crises.

Personalized Treatment Plans

AI algorithms can analyze vast amounts of data to create personalized treatment plans tailored to individual needs and preferences. This personalized approach has the potential to significantly improve treatment outcomes.

- Data-driven insights: AI can analyze patient data, including symptoms, history, and responses to treatment, to identify patterns and tailor interventions.

- Adaptive algorithms: These algorithms can adjust treatment plans in real time based on patient progress and feedback, with the aim of keeping interventions effective.

- Potential for faster progress: Personalized treatment, combined with readily available support, could lead to faster progress and improved outcomes compared to traditional methods.

Data Collection and Privacy Concerns

The very features that make AI therapy appealing—its data-driven approach and personalized treatment—also raise serious privacy concerns. The extensive collection of personal data inherent in these platforms poses significant risks.

- Storage security vulnerabilities: Mental health data is highly sensitive, which makes the systems that store it a prime target for cyberattacks. Robust security measures are essential.

- Potential for data breaches: Even well-secured systems can be breached, and exposed therapy records could have devastating consequences for patients.

- Lack of clear regulations: The current lack of comprehensive data privacy regulations specifically addressing AI therapy creates a significant vulnerability.

The Surveillance State: AI Therapy as a Tool of Control

The potential for AI therapy to be misused as a tool of surveillance and control in a police state is a significant concern. The vast amount of personal data collected by these platforms could be exploited to monitor and manipulate individuals.

Data Mining for Predictive Policing

Law enforcement agencies might attempt to mine AI therapy data to identify individuals deemed "at risk" of committing crimes, potentially leading to preemptive arrests and surveillance.

- Correlation of mental health data with criminal behavior: A statistical correlation between a mental health condition and criminal behavior says little about what any one individual will do, and misreading such correlations is a major concern.

- Biased algorithms: Algorithms trained on biased data could perpetuate and amplify existing societal biases, leading to unfair targeting of specific groups.

- Potential for false positives: The inherent uncertainty in predicting criminal behavior based on mental health data could lead to a high rate of false positives, resulting in unnecessary surveillance and harassment.
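The false-positive problem is easier to see with a little arithmetic. The sketch below applies Bayes' rule to a hypothetical screening model; the population size, base rate, sensitivity, and specificity are all made-up numbers chosen for illustration, not figures from any real system.

```python
def positive_predictive_value(base_rate: float, sensitivity: float, specificity: float) -> float:
    """Share of flagged people who are true positives (Bayes' rule)."""
    true_pos = base_rate * sensitivity
    false_pos = (1.0 - base_rate) * (1.0 - specificity)
    return true_pos / (true_pos + false_pos)


# Hypothetical inputs, assumed purely for illustration.
population = 100_000
base_rate = 0.001    # assume 1 in 1,000 people would actually offend
sensitivity = 0.90   # assume the model catches 90% of them
specificity = 0.95   # assume it correctly clears 95% of everyone else

ppv = positive_predictive_value(base_rate, sensitivity, specificity)
true_flags = population * base_rate * sensitivity
false_flags = population * (1.0 - base_rate) * (1.0 - specificity)

print(f"People flagged: {true_flags + false_flags:.0f}")
print(f"Wrongly flagged: {false_flags:.0f}")
print(f"Chance a flagged person is a true positive: {ppv:.1%}")
```

Under these assumptions, fewer than 2 in 100 flagged people are true positives, even though the model sounds accurate on paper. The rarer the predicted behavior, the worse this ratio gets.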

Monitoring and Control of Dissent

AI therapy platforms could be used to identify and suppress political dissent or opposition by analyzing speech patterns and emotional responses.

- Analysis of speech patterns and emotional responses: AI can analyze language used in therapy sessions to identify individuals expressing dissenting views.

- Flagging individuals for "re-education" or surveillance: Individuals identified as expressing dissent could be subjected to increased surveillance or forced "re-education" programs.

- Erosion of free speech: The potential for such misuse poses a severe threat to freedom of speech and expression.

Lack of Transparency and Accountability

The lack of transparency and accountability within the AI therapy industry exacerbates the risk of abuse. Without clear guidelines and oversight, the potential for manipulation is significant.

- Absence of standardized ethical guidelines: The absence of universally accepted ethical guidelines for the development and use of AI therapy creates a regulatory vacuum.

- Difficulty in holding developers accountable: Determining responsibility for AI-driven harm is complex, making it challenging to hold developers accountable.

- Potential for manipulation: Without oversight, algorithms and data could be manipulated to serve specific political or social goals.

Mitigating the Risks: Ethical Frameworks and Regulations

To prevent the misuse of AI therapy and ensure its ethical development and deployment, robust regulatory frameworks and ethical guidelines are crucial.

Data Privacy Legislation

Strong data protection laws specifically addressing AI therapy data are essential to safeguard patient privacy and prevent misuse.

- Data anonymization: Techniques to remove or obscure identifying information from data sets are crucial.

- Informed consent: Patients must be fully informed about how their data will be used and have the right to withdraw consent at any time.

- User control over data: Individuals should have control over their data and be able to access, correct, or delete it as needed.
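As a rough illustration of the anonymization point above, here is a minimal sketch of pseudonymization: direct identifiers are dropped and the patient ID is replaced with a keyed hash. The field names (`patient_id`, `name`, `email`, `phone`) and the record layout are hypothetical, and this is only one step; free-text session notes and rare combinations of attributes can still re-identify someone.

```python
import hashlib
import hmac

# Hypothetical field names, assumed for illustration only.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and replace the patient ID with a keyed hash."""
    token = hmac.new(
        secret_key, record["patient_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "patient_id"
    }
    cleaned["pseudonym"] = token
    return cleaned


example = {
    "patient_id": "p-001",
    "name": "Alex Example",
    "email": "alex@example.com",
    "mood_score": 4,
}
safe = pseudonymize(example, secret_key=b"replace-with-a-real-secret")
print(sorted(safe.keys()))
```

A keyed hash (HMAC) is used rather than a plain hash so that someone without the key cannot rebuild the mapping by hashing a list of known IDs. The key itself then becomes something that has to be protected and governed.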

Algorithmic Transparency and Bias Mitigation

Transparency and bias mitigation are crucial to ensure fairness and prevent discriminatory outcomes.

- Regular audits: Independent audits of algorithms to ensure fairness and accuracy are essential.

- Independent verification: Third-party verification of algorithms can help to build trust and confidence.

- Diverse development teams: Diverse teams are more likely to identify and mitigate biases in algorithms.
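One thing an audit might check is whether the system wrongly flags some groups more often than others. The sketch below compares false positive rates across groups; the `(group, flagged, actual)` record layout and the sample data are assumptions made for illustration, and a real audit would look at several fairness measures, not just this one.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Return, per group, the share of true negatives that were wrongly flagged.

    Each record is a (group, flagged, actual) tuple, a hypothetical layout.
    """
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, flagged, actual in records:
        if not actual:  # only people who were not actually at risk
            negatives[group] += 1
            if flagged:
                false_pos[group] += 1
    return {group: false_pos[group] / count for group, count in negatives.items()}


# Made-up audit sample, for illustration only.
sample = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", False, False),
    ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", True, True),
]
rates = false_positive_rate_by_group(sample)
print(rates)
```

In this made-up sample, group B is wrongly flagged twice as often as group A. A gap like that is the kind of disparity an independent audit would be expected to surface and explain.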

Human Oversight and Ethical Review Boards

The involvement of mental health professionals and ethicists in the development and deployment of AI therapy is critical to ensure ethical considerations are prioritized.

- Ethical guidelines for data usage: Clear guidelines on data collection, storage, and use are crucial.

- Monitoring of AI performance: Continuous monitoring to ensure that AI systems are functioning ethically and effectively is necessary.

- Mechanisms for reporting abuses: Clear mechanisms for reporting potential abuses and ensuring accountability are needed.

Conclusion

AI therapy holds immense potential to improve access to mental healthcare and personalize treatment. However, its misuse as a tool of surveillance in a police state is a real risk: the vast amounts of sensitive personal data collected by these platforms could be exploited for purposes far removed from therapeutic care. The future of AI therapy hinges on our collective commitment to prioritize ethical considerations and robust regulations. Let's ensure that the promise of improved mental health access is not overshadowed by the spectre of a surveillance state. Demand ethical AI therapy!

Featured Posts

-

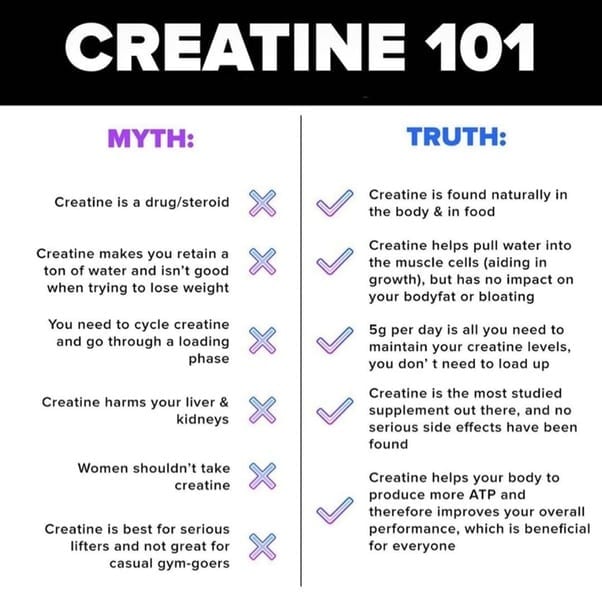

Is Creatine Safe And Effective A Detailed Look

May 16, 2025

Is Creatine Safe And Effective A Detailed Look

May 16, 2025 -

Le Repechage De La Lnh Hors De Montreal Succes Ou Echec

May 16, 2025

Le Repechage De La Lnh Hors De Montreal Succes Ou Echec

May 16, 2025 -

Paddy Pimbletts Road To Ufc 314 Champion In The Making

May 16, 2025

Paddy Pimbletts Road To Ufc 314 Champion In The Making

May 16, 2025 -

Nouveautes Ge Force Now 21 Jeux Integrent Le Catalogue

May 16, 2025

Nouveautes Ge Force Now 21 Jeux Integrent Le Catalogue

May 16, 2025 -

Jalen Brunson Injury Update Playing Sunday After One Month Absence

May 16, 2025

Jalen Brunson Injury Update Playing Sunday After One Month Absence

May 16, 2025