AI's Learning Limitations: Promoting Ethical And Responsible AI Development

Data Bias and its Impact on AI Learning

AI systems learn from data, and if that data reflects existing societal biases, the resulting model can perpetuate and even amplify them. This phenomenon, known as algorithmic bias, is a major obstacle to fairness in AI, which makes mitigating data bias a prerequisite for equitable AI systems. For example:

- Facial recognition systems have shown markedly higher error rates for people with darker skin tones, leading to misidentification and potential miscarriages of justice.

- Loan application algorithms have been shown to discriminate against certain demographic groups, perpetuating financial inequality.

Addressing data bias requires a multifaceted approach:

- Data augmentation: Supplementing existing datasets with more representative data can help balance the scales.

- Careful data curation and preprocessing: Identifying and correcting biases in the data before training the AI model is essential.

- Algorithmic fairness techniques: Employing algorithms specifically designed to mitigate bias during the training process.

- Regular audits and monitoring: Continuously evaluating AI systems for bias and adjusting accordingly is vital to maintaining fairness.
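Audits and preprocessing can both start from simple group statistics. The sketch below assumes a binary outcome (1 = approve, 0 = deny) and a single sensitive attribute. It computes each group's selection rate, the demographic parity difference, and the disparate impact ratio, flagging ratios below 0.8 (the "four-fifths rule" from US employment-selection guidelines). It then computes reweighing weights in the style of Kamiran and Calders, which make group membership and outcome statistically independent in the weighted training data. The function names and toy data are hypothetical; libraries such as Fairlearn and AIF360 provide maintained implementations of these ideas.

```python
from collections import Counter


def selection_rates(groups, outcomes):
    """Fraction of positive outcomes (1) within each group."""
    totals = Counter(groups)
    positives = Counter(g for g, o in zip(groups, outcomes) if o == 1)
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(groups, outcomes, threshold=0.8):
    """Group-fairness audit for binary outcomes (hypothetical helper)."""
    rates = selection_rates(groups, outcomes)
    highest, lowest = max(rates.values()), min(rates.values())
    return {
        "rates": rates,
        # Demographic parity difference: gap between best- and worst-treated group.
        "parity_difference": highest - lowest,
        # Disparate impact ratio: worst-treated group's rate relative to the best.
        "impact_ratio": lowest / highest if highest > 0 else 1.0,
        # Flag when the ratio falls below the four-fifths threshold.
        "flagged": highest > 0 and lowest / highest < threshold,
    }


def reweighing_weights(groups, labels):
    """Kamiran-Calders style reweighing: weight each (group, label) cell by
    P(group) * P(label) / P(group, label), so that group and label look
    statistically independent in the weighted training data."""
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


# Hypothetical historical loan outcomes for two groups, used both for the
# audit and as training labels.
groups = ["A"] * 5 + ["B"] * 5
outcomes = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]
report = fairness_report(groups, outcomes)
print(report["impact_ratio"], report["flagged"])  # 0.5 True
weights = reweighing_weights(groups, outcomes)
```

Group B's approval rate (0.4) is half of group A's (0.8), so the audit flags the data. After reweighing, both groups have the same weighted approval rate, so a model trained with these sample weights no longer sees group membership as predictive of the outcome. This corrects only one statistical symptom and is no substitute for the ongoing monitoring described above.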

The Problem of Explainability in AI (Explainable AI or XAI)

Many AI systems, particularly deep learning models, are described as "black box" systems: their internal decision-making processes are opaque, making it difficult to understand why a model arrived at a particular conclusion. This lack of transparency is the core problem that Explainable AI (XAI) and interpretable AI aim to solve, and it matters for several reasons:

- Trust: Users are more likely to trust an AI system if they understand how it works.

- Accountability: Explainability is crucial for determining responsibility when an AI makes an incorrect or harmful decision.

- Debugging: Understanding the decision-making process allows developers to identify and correct errors.

Improving explainability requires several strategies:

- Developing more transparent algorithms: Designing algorithms that inherently reveal their reasoning.

- Employing visualization techniques: Using visual tools to represent the AI's decision-making process.

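- Using methods like LIME or SHAP: These techniques explain individual predictions. LIME fits a simple surrogate model to the original model's behavior around a single input, while SHAP attributes a prediction to each input feature using Shapley values from cooperative game theory.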
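SHAP is built on Shapley values, and for a model with only a few features they can be computed exactly by brute force, which makes the idea concrete. The sketch below is not the `shap` library's API. It enumerates every coalition of features, fills in "absent" features from a baseline input (a simplifying assumption; SHAP implementations offer several ways to model missing features), and averages each feature's marginal contribution using the standard Shapley weights. Because the cost grows exponentially with the number of features, practical tools approximate this computation.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley attribution of model(x) to each feature of x.

    Features outside a coalition take their value from `baseline`.
    Exponential in len(x), so only suitable for a handful of features.
    """
    n = len(x)

    def value(coalition):
        # Input that uses x for features in the coalition, baseline elsewhere.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


# Hypothetical model with an interaction between features 0 and 1.
def toy_model(z):
    return z[0] * z[1] + z[2]


x = [3.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]
print(shapley_values(toy_model, x, baseline))
```

Here the interaction term's contribution of 6 is split evenly between features 0 and 1, giving attributions of 3, 3, and 1 (up to floating-point rounding). They sum to `toy_model(x) - toy_model(baseline)`, which is 7: Shapley attributions always add up to the difference between the prediction being explained and the baseline prediction.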

Generalization and Robustness Challenges in AI

AI systems are often trained on specific datasets and may struggle to generalize their knowledge to new, unseen data or real-world scenarios. This weakness leaves models fragile, which is why robustness is a central goal of AI development. The challenges include:

- Difficulty in handling out-of-distribution data: AI systems may perform poorly when presented with data that differs significantly from their training data.

- Vulnerability to adversarial attacks: Small, carefully crafted perturbations to input data can cause AI systems to make incorrect predictions.
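A simple first line of defense against out-of-distribution data is to notice it: compare each incoming example with statistics of the training data and escalate suspicious inputs instead of trusting the prediction. The sketch below flags any input with a feature more than three standard deviations from its training mean. The class name, the threshold of 3, and the data are illustrative assumptions. Per-feature z-scores miss many kinds of distribution shift (for example, unusual combinations of individually normal values), so real systems typically need stronger detectors.

```python
from statistics import mean, stdev


class ZScoreOODDetector:
    """Flags inputs whose features fall far outside the training distribution
    (hypothetical helper built on per-feature z-scores)."""

    def __init__(self, training_rows, threshold=3.0):
        columns = list(zip(*training_rows))
        self.means = [mean(c) for c in columns]
        # Guard against zero variance so constant features don't divide by zero.
        self.stds = [stdev(c) or 1e-12 for c in columns]
        self.threshold = threshold

    def max_z(self, row):
        """Largest absolute z-score across the row's features."""
        return max(abs(v - m) / s for v, m, s in zip(row, self.means, self.stds))

    def is_out_of_distribution(self, row):
        return self.max_z(row) > self.threshold


# Hypothetical two-feature training data.
train = [[1.0, 10.0], [1.2, 11.0], [0.9, 9.5], [1.1, 10.5], [1.0, 10.2]]
detector = ZScoreOODDetector(train)
print(detector.is_out_of_distribution([1.05, 10.3]))  # False
print(detector.is_out_of_distribution([1.0, 25.0]))   # True
```

The first input resembles the training data; the second has a feature roughly 26 standard deviations from its training mean, so a deployed system could route it to a human instead of acting on the model's output.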

Improving generalization and robustness necessitates:

- Data augmentation with diverse and realistic scenarios: Training AI models on data that reflects the variability of real-world situations.

- Adversarial training methods: Training AI models to be resilient to adversarial attacks.

- Robust optimization techniques: Optimizing for worst-case performance over a range of plausible perturbations or data shifts, rather than for average performance alone, to make models more stable and better at generalizing.
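Adversarial attacks and adversarial training can both be shown on a model small enough to differentiate by hand. The toy sketch below uses the Fast Gradient Sign Method (FGSM, introduced by Goodfellow et al.) against a logistic-regression classifier: every feature is shifted by a fixed amount epsilon in whichever direction increases the model's loss. Adversarial training then adds an FGSM copy of each example to every gradient-descent step, a cheap approximation of the min-max objective behind robust optimization. The weights, data, epsilon, learning rate, and epoch count are illustrative assumptions; real systems apply the same idea to neural networks through an automatic-differentiation framework.

```python
import math


def predict_proba(w, b, x):
    """Logistic-regression probability of class 1 (numerically stable sigmoid)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """FGSM: move each feature by epsilon in the direction that increases the
    cross-entropy loss. For logistic regression, d(loss)/d(x_i) = (p - y) * w_i."""
    p = predict_proba(w, b, x)
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, epsilon=0.5, lr=0.1, epochs=200):
    """Full-batch gradient descent on each clean example plus an FGSM copy
    crafted against the current weights at every epoch."""
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        batch = list(data)
        batch += [(fgsm(w, b, x, y, epsilon), y) for x, y in data]
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y  # gradient of the loss w.r.t. the logit
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b


# 1) Attack: a hypothetical trained model and a correctly classified input.
w0, b0 = [1.5, -2.0, 0.5], 0.0
x0 = [0.4, 0.1, 0.2]
x0_adv = fgsm(w0, b0, x0, 1, epsilon=0.15)
print(predict_proba(w0, b0, x0), predict_proba(w0, b0, x0_adv))

# 2) Defense: adversarial training on hypothetical separable data (features, label).
data = [([2.0, 2.0], 1), ([2.0, 3.0], 1), ([3.0, 2.0], 1),
        ([-2.0, -2.0], 0), ([-2.0, -3.0], 0), ([-3.0, -2.0], 0)]
w, b = adversarial_train(data, epsilon=0.5)
robust = all((predict_proba(w, b, fgsm(w, b, x, y, 0.5)) > 0.5) == (y == 1)
             for x, y in data)
print("weights:", w, "bias:", b, "correct under FGSM:", robust)
```

In the attack, no feature moves by more than 0.15, yet the probability of class 1 falls from about 0.62 to about 0.475 and the decision flips. On the toy dataset, the adversarially trained model classifies every FGSM-perturbed point correctly. On real data, adversarial training often costs some accuracy on clean inputs, and models trained only against FGSM can still be fooled by stronger, multi-step attacks.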

The Ethical Implications of AI's Learning Limitations

The limitations of AI discussed above have profound ethical implications. Biased or unreliable AI systems can lead to:

- Discrimination and inequality: Perpetuating and amplifying existing societal biases.

- Erosion of trust: Undermining public confidence in AI systems and technology.

- Unintended consequences: Leading to unforeseen and potentially harmful outcomes.

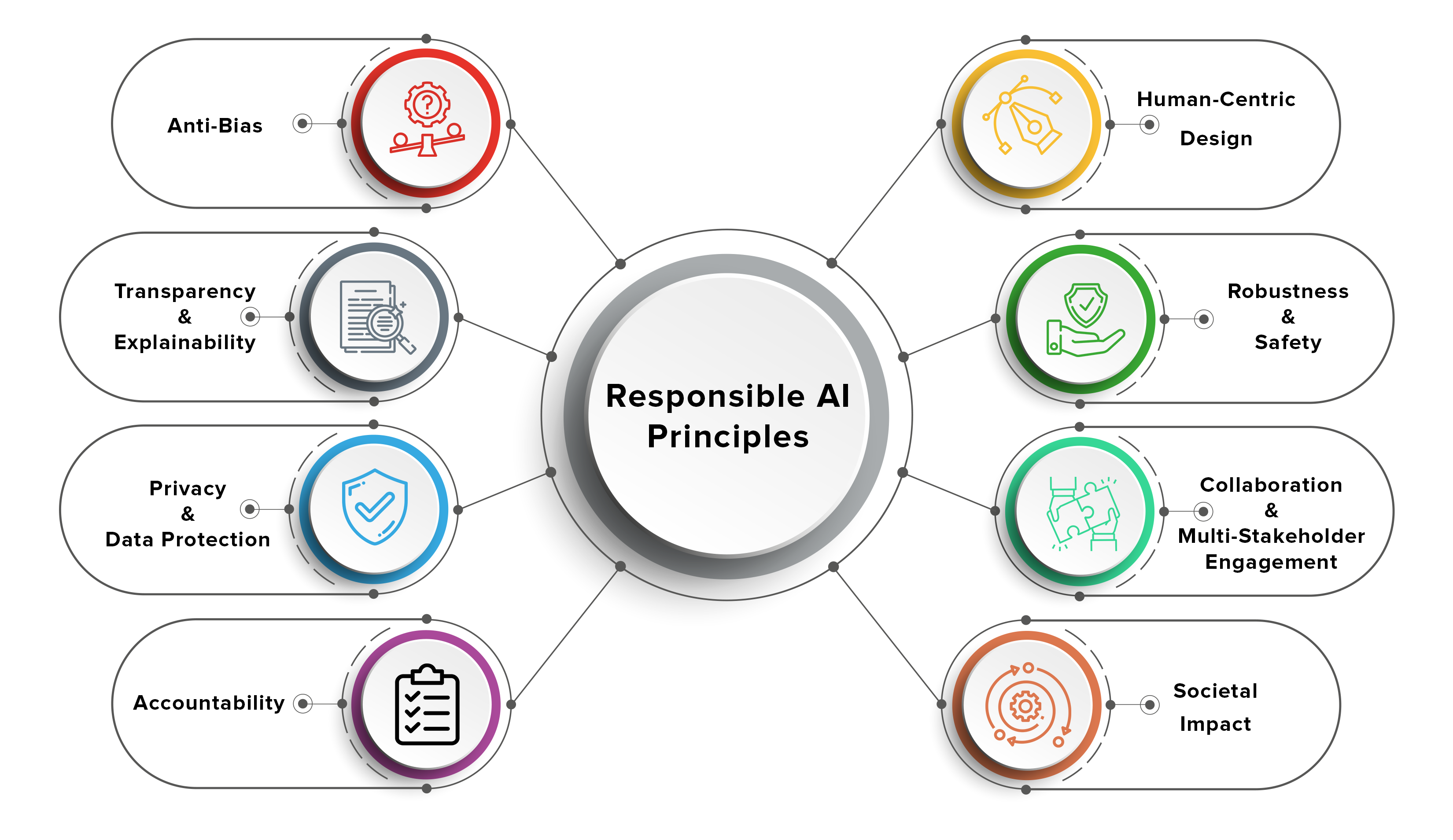

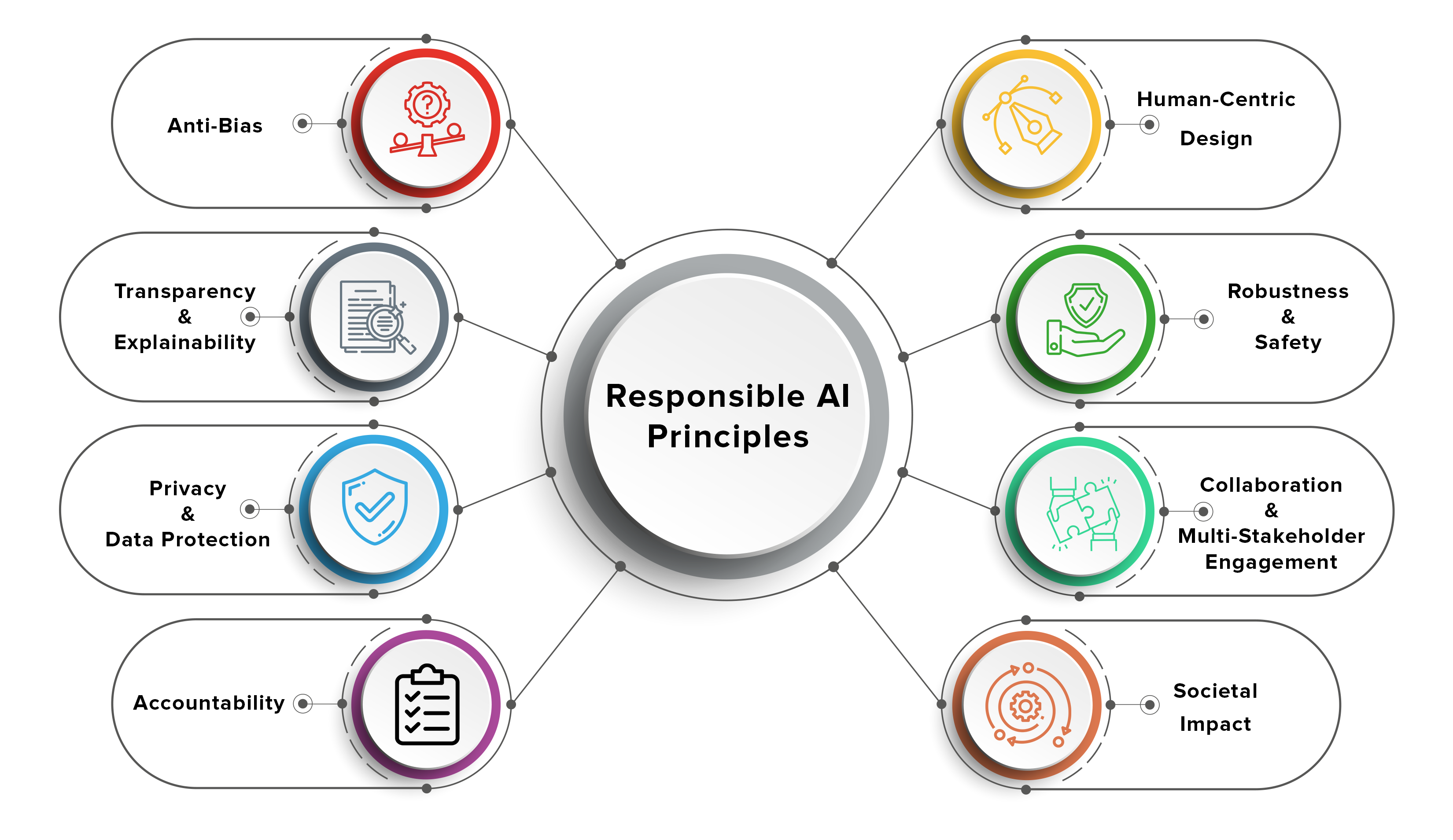

Addressing these ethical challenges requires:

- Human oversight: Ensuring human control and accountability in AI development and deployment.

- Ethical guidelines: Developing and adhering to ethical guidelines for AI research, development, and use.

- Collaboration: Fostering collaboration between researchers, policymakers, the public, and industry stakeholders to address AI ethics concerns.

- Legal and regulatory frameworks: Establishing appropriate legal and regulatory mechanisms to govern the development and use of AI.

Conclusion: Building a Future with Responsible AI Development by Addressing AI's Learning Limitations

This article has highlighted three key limitations of AI learning (data bias, limited explainability, and weak generalization) along with the ethical implications that follow from them. Addressing these limitations is not merely a technical challenge but a moral imperative, and creating ethical and responsible AI systems requires a concerted effort from all stakeholders. We must prioritize mitigating AI biases, making AI systems more transparent, and ensuring their robustness. By supporting further research on responsible AI development, taking part in discussions on AI ethics, and advocating for policies that promote the ethical use of AI, we can build a future in which AI benefits all of humanity.
