Algorithms And Mass Shootings: Holding Tech Companies Accountable

Every year, mass shootings claim many lives and devastate communities, prompting urgent calls for solutions. Technology's role in these tragedies is drawing growing scrutiny, raising critical questions about the responsibility of tech companies. This article explores how algorithms can contribute to the spread of extremist ideologies and violence, and argues for greater accountability from tech companies.

## The Role of Social Media Algorithms in Radicalization

Social media algorithms, designed to maximize user engagement, inadvertently create environments conducive to radicalization. These algorithms prioritize content that elicits strong emotional responses, often favoring sensationalist and extremist viewpoints.
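As a rough illustration of the mechanism described above, the sketch below ranks a few made-up posts by a hypothetical engagement score. The `Post` fields, the weights, and the `engagement_score` function are assumptions chosen for illustration only; they do not represent any platform's actual ranking formula.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    likes: int
    shares: int
    angry_reactions: int


# Hypothetical weights: signals tied to strong emotion count for more.
WEIGHTS = {"likes": 1.0, "shares": 3.0, "angry_reactions": 5.0}


def engagement_score(post: Post) -> float:
    """Weighted sum of interaction counts (an assumed, simplified metric)."""
    return (
        WEIGHTS["likes"] * post.likes
        + WEIGHTS["shares"] * post.shares
        + WEIGHTS["angry_reactions"] * post.angry_reactions
    )


def rank_feed(posts):
    """Return posts ordered from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


# Made-up example data.
posts = [
    Post("Local council approves new park", likes=120, shares=5, angry_reactions=1),
    Post("Outrage-bait conspiracy claim", likes=40, shares=60, angry_reactions=90),
    Post("Measured policy explainer", likes=80, shares=10, angry_reactions=2),
]

for post in rank_feed(posts):
    print(f"{engagement_score(post):7.1f}  {post.title}")
```

In this toy data, the post with the fewest likes still ranks first because shares and angry reactions carry more weight. That is the dynamic the paragraph describes: a ranking objective built on engagement alone can favor provocative content without anyone intending it.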

### Echo Chambers and Filter Bubbles

Algorithms can create echo chambers and filter bubbles, reinforcing pre-existing beliefs and limiting exposure to diverse perspectives. This isolates people within like-minded communities, which can amplify extremist ideologies and reduce tolerance for dissenting opinions.

- Examples: Facebook's News Feed algorithm, YouTube's recommendation system, and Twitter's trending topics can all contribute to echo chambers. These platforms optimize for engagement metrics, which often favors emotionally charged content even when that content is harmful.

- Some studies suggest a correlation between time spent consuming extremist content on social media and the likelihood of radicalization. A lack of exposure to counter-narratives can exacerbate this effect.

- The growth of extremist groups online, facilitated by algorithm-driven recommendations, has been alarming. Research indicates a significant increase in online recruitment and the spread of violent propaganda through these platforms.

### Targeted Advertising and Propaganda

Sophisticated algorithms allow extremist groups to target vulnerable individuals with precisely tailored propaganda and recruitment materials. This targeted dissemination bypasses traditional media gatekeepers, making it significantly more effective.

- Examples include targeted Facebook ads promoting extremist ideologies and YouTube videos promoting conspiracy theories, both of which reach individuals based on their browsing history and online activity. The lack of transparency in ad targeting makes this activity difficult to track and regulate.

- The use of AI in creating and disseminating propaganda is a growing concern. AI-powered tools can generate realistic-looking videos and images, making it more challenging to identify and counter disinformation campaigns.

- The ability to micro-target individuals based on their demographics, interests, and online behavior makes it easier for extremist groups to identify and recruit potential members.

## The Failure of Content Moderation Systems

Despite efforts to mitigate the spread of harmful content, current content moderation strategies employed by tech companies are demonstrably insufficient.

### Insufficient Oversight and Resources

The sheer volume of content uploaded to social media platforms daily makes it nearly impossible for human moderators to review everything effectively. Over-reliance on automated systems, often flawed and prone to biases, further exacerbates the problem.

- Challenges include identifying subtle forms of hate speech, detecting manipulative tactics used by extremists, and removing content quickly enough to prevent its spread.

- The lack of sufficient human oversight leads to delays in removing violent content and allows harmful material to circulate for extended periods.

- Understaffing and inadequate training of content moderators contribute to inconsistent enforcement of community guidelines.

### The "Chilling Effect" on Free Speech

Concerns regarding censorship and over-moderation often arise in discussions of content moderation. Finding the right balance between protecting free speech and ensuring public safety is a significant challenge.

- The removal of legitimate content due to algorithmic errors can create a "chilling effect," discouraging users from expressing their views freely.

- Potential solutions involve improving the accuracy and transparency of algorithms, providing more robust appeals processes, and increasing the involvement of human moderators in content review.

- The legal and ethical implications of content moderation require ongoing discussion and debate, focusing on developing clear guidelines that protect both free speech and public safety.

## Holding Tech Companies Accountable

Addressing the issue requires a multi-pronged approach, involving stronger regulations, increased transparency, and enhanced user control.

### Regulatory Frameworks and Legislation

Stronger regulations and legislation are crucial to hold tech companies accountable for the role their algorithms play in facilitating violence.

- Specific legislative changes could include requirements for transparency in algorithm design, stricter content moderation policies, and clear liability for harmful content disseminated through platforms.

- Existing regulations, such as the Digital Services Act (DSA) in the European Union, offer a starting point, but stronger and more globally consistent frameworks are needed.

- Independent audits of algorithms could help assess their potential for harm and ensure compliance with regulations.
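One way an audit of this kind might quantify potential harm is to compare how often flagged content appears in recommendations against how common it is in the catalog overall. The sketch below is a minimal illustration under stated assumptions: the function names, the item identifiers, and the set of flagged items are all hypothetical, and a real audit would need far richer data and methodology.

```python
def flagged_share(items, flagged_ids):
    """Fraction of items that appear in the flagged set (0.0 for an empty list)."""
    if not items:
        return 0.0
    return sum(1 for item in items if item in flagged_ids) / len(items)


def amplification_ratio(recommended, catalog, flagged_ids):
    """Flagged share in recommendations divided by flagged share in the catalog.

    A value above 1 means flagged content is over-represented in
    recommendations relative to its baseline prevalence.
    Returns None when the catalog contains no flagged items.
    """
    baseline = flagged_share(catalog, flagged_ids)
    if baseline == 0:
        return None
    return flagged_share(recommended, flagged_ids) / baseline


# Made-up example data: 20 catalog items, 2 of them flagged by reviewers.
catalog = [f"video_{n}" for n in range(1, 21)]
flagged_ids = {"video_3", "video_7"}

# Hypothetical top-5 recommendations observed during the audit.
recommended = ["video_3", "video_7", "video_1", "video_2", "video_5"]

ratio = amplification_ratio(recommended, catalog, flagged_ids)
print(f"Amplification ratio: {ratio:.2f}")
```

A ratio well above 1 in repeated samples would suggest the recommender surfaces flagged material more often than chance would, which is the kind of finding an independent auditor could report to a regulator.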

### Increased Transparency and User Control

Greater transparency in algorithm design and improved user control are essential for empowering individuals to manage their online experiences.

- Tech companies should publish regular reports detailing the design and impact of their algorithms, including metrics related to content moderation and the spread of extremist ideologies.

- Improved user-friendly privacy settings and tools allowing users to control the type of content they see can help mitigate exposure to harmful material.

- Empowering users to curate their feeds and report harmful content directly contributes to a safer online environment.

## Conclusion

Algorithms play a significant role in facilitating the spread of extremist ideologies and violence. Through their algorithm design and content moderation practices, tech companies bear significant responsibility for addressing this issue. We must demand greater transparency and accountability from them. Contact your legislators and urge them to support legislation that holds these companies responsible for the role their algorithms play in amplifying extremism and violence. Continued inaction risks further escalation of online radicalization and real-world violence. The time to act decisively is now.

Featured Posts

-

Measles Outbreak Threatens Canadas Elimination Status What You Need To Know

May 30, 2025

Measles Outbreak Threatens Canadas Elimination Status What You Need To Know

May 30, 2025 -

Reforme Des Retraites Laurent Jaccobelli Evoque Une Possible Alliance Rn Gauche

May 30, 2025

Reforme Des Retraites Laurent Jaccobelli Evoque Une Possible Alliance Rn Gauche

May 30, 2025 -

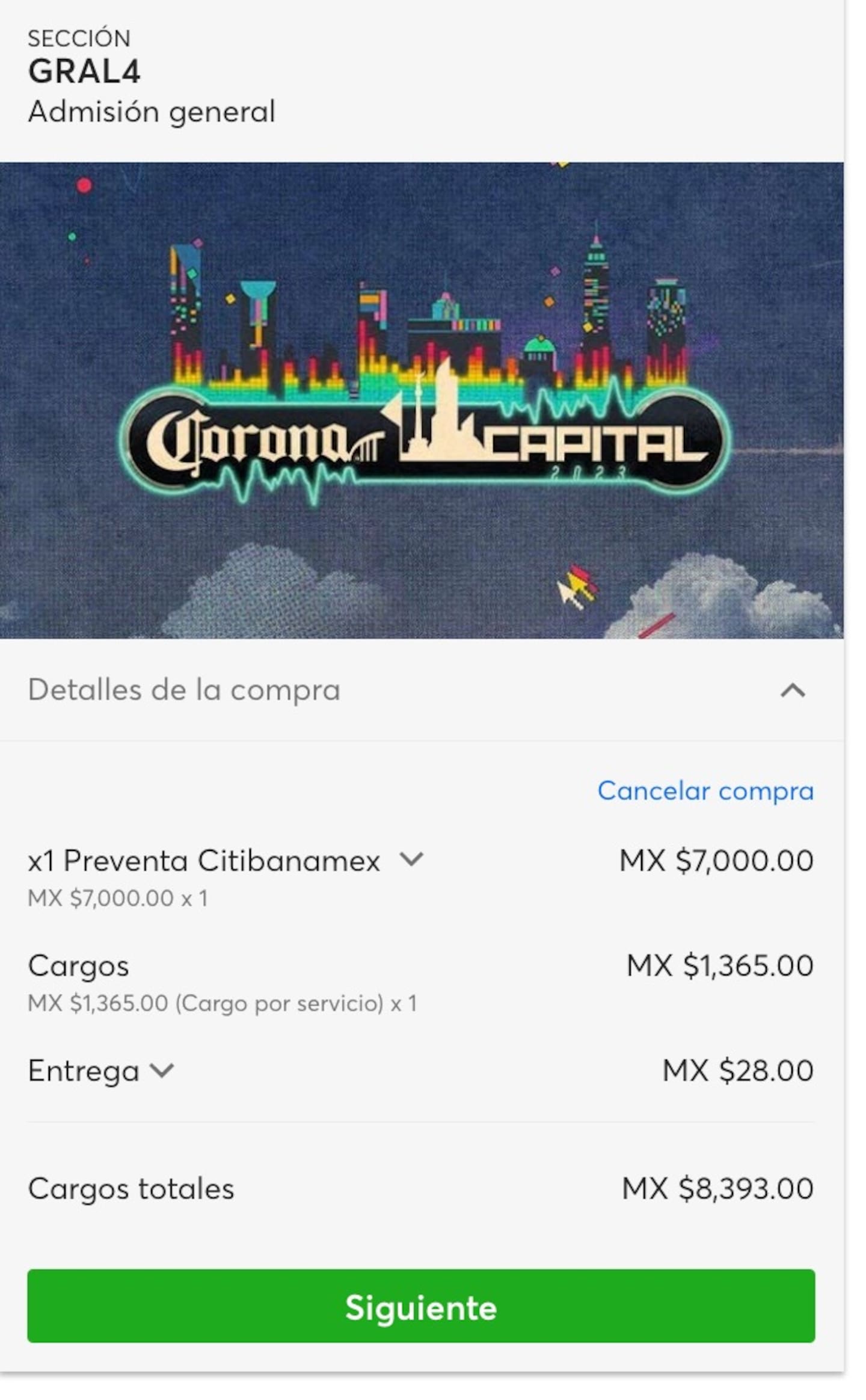

Mas Claridad Sobre Los Precios De Boletos Con Ticketmaster

May 30, 2025

Mas Claridad Sobre Los Precios De Boletos Con Ticketmaster

May 30, 2025 -

Top Seed Pegula Claims Charleston Title After Collins Battle

May 30, 2025

Top Seed Pegula Claims Charleston Title After Collins Battle

May 30, 2025 -

Jacob Alon His Path Away From Dentistry

May 30, 2025

Jacob Alon His Path Away From Dentistry

May 30, 2025