Character AI's Chatbots: Exploring The Limits Of Free Speech Protection

The Allure and Accessibility of Character AI Chatbots

Character AI's rapid rise in popularity stems from its ease of use and unique features. Unlike many other AI chatbots, Character AI allows users to create and interact with diverse conversational characters, fostering a sense of interactive storytelling and creative expression. This accessibility is a key factor in its success.

- Accessibility for a broad audience: The platform's intuitive interface requires minimal technical expertise, making it accessible to a vast range of users, from casual conversationalists to serious writers and game developers.

- Ease of creating diverse conversational characters: Users can easily define and customize character personalities, leading to rich and varied interactions that encourage further creative exploration.

- Potential for creative expression and exploration: Character AI empowers users to explore different narratives, experiment with styles, and develop their creative skills within a dynamic and responsive environment. The possibilities seem limitless.

- Low barrier to entry for content creation: The ease of use significantly lowers the barrier for individuals wanting to create and share their own stories and characters, leading to a surge in user-generated content.

Content Moderation Challenges on Character AI

Moderating user-generated content within Character AI's dynamic chatbot environment presents significant challenges. The sheer volume of interactions and the creative flexibility of the platform make it difficult to detect and respond to harmful content in real time. Identifying hate speech, misinformation, and other harmful content inside role-play and fiction requires detection systems that can account for nuance and context.

- Scale of user-generated content: The massive amount of text generated daily makes manual moderation impractical, demanding advanced AI-powered moderation tools.

- Real-time moderation difficulties: The rapid pace of interactions makes real-time detection and removal of harmful content incredibly challenging.

- Nuances of language and context: Satirical, sarcastic, or otherwise nuanced language can easily be misinterpreted by automated systems, requiring human oversight and sophisticated algorithms.

- Potential for biases in moderation algorithms: AI moderation systems can inherit biases present in their training data, leading to unfair or discriminatory content removal.
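The tension between scale and nuance described above is often handled by letting automation decide only the clear cases and routing ambiguous ones to people. The following is a minimal sketch of that idea, not a description of how Character AI actually moderates content. The `harm_score` field, the `triage` function, and both threshold values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


# Hypothetical thresholds; real values would have to be tuned on labeled data.
BLOCK_THRESHOLD = 0.9
REVIEW_THRESHOLD = 0.5


@dataclass
class Message:
    text: str
    # Assumed output of some upstream classifier, in the range [0, 1].
    harm_score: float


def triage(message: Message) -> Decision:
    """Route a message based on how confident the classifier is."""
    if not 0.0 <= message.harm_score <= 1.0:
        raise ValueError("harm_score must be in [0, 1]")
    if message.harm_score >= BLOCK_THRESHOLD:
        # High confidence: act automatically to keep up with volume.
        return Decision.BLOCK
    if message.harm_score >= REVIEW_THRESHOLD:
        # Uncertain (satire, sarcasm, role-play): defer to a human moderator.
        return Decision.HUMAN_REVIEW
    return Decision.ALLOW
```

Widening the band between the two thresholds sends more content to human reviewers, which reduces automated mistakes at the cost of a larger review workload.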

The Legal Landscape of Free Speech and AI

Existing laws on online content and speech, such as Section 230 of the Communications Decency Act in the US and intermediary-liability rules in other jurisdictions, play a crucial role in shaping the legal landscape for platforms like Character AI. Section 230 generally shields online platforms from liability for content their users post, but whether that protection extends to content generated by a platform's own AI is unsettled and still evolving.

- Section 230 and its implications for Character AI: Section 230's applicability to AI-generated content is a key area of legal debate, particularly concerning the platform's responsibility for content created by its users through the AI.

- Liability for user-generated content: Determining the platform's liability for harmful content created using its AI remains a significant legal challenge.

- Challenges in defining and enforcing legal boundaries for AI: The rapid evolution of AI technology makes it difficult for lawmakers to establish clear and enforceable legal boundaries.

- International legal variations concerning online speech: Different countries have varying legal standards concerning online speech, making it challenging to establish consistent global moderation policies.

Ethical Considerations Beyond Legal Frameworks

Beyond the legal frameworks, significant ethical dilemmas surround AI-generated content and its potential societal impact. The creation of harmful or misleading content raises serious concerns about the platform's role in shaping online discourse.

- The spread of misinformation and disinformation: Users can easily produce realistic-sounding fake news or propaganda with Character AI, which makes the platform a potential source of misinformation.

- The creation of harmful or offensive content: The platform's creative flexibility enables the generation of content that is hateful, discriminatory, or otherwise harmful.

- The ethical implications of AI-generated deepfakes: Character AI's ability to generate realistic text could be exploited to impersonate real people convincingly, a text-based form of deepfake that could cause reputational damage or other harms.

- The responsibility of developers and users: Both the developers of Character AI and its users bear a shared responsibility for ensuring the ethical use of the platform.

Finding a Balance: Strategies for Responsible AI Development

Balancing free speech with the need for responsible content moderation requires a multifaceted approach that involves technological advancements, improved policies, and user education.

- Improved AI detection of harmful content: Investing in more sophisticated AI algorithms that can accurately identify and flag harmful content, even within nuanced contexts, is crucial.

- Enhanced user reporting mechanisms: Providing users with easy and effective ways to report harmful content is essential for maintaining a safe online environment.

- Transparency in moderation policies: Clearly communicating moderation policies to users builds trust and promotes accountability.

- Community guidelines and user education: Educating users about responsible online behavior and establishing clear community guidelines can help foster a more positive and safe environment.

- Development of ethical guidelines for AI chatbots: Creating a framework of ethical guidelines for AI chatbot development can help guide developers and shape responsible innovation.
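As one illustration of the user reporting strategy listed above, reports can be aggregated so that a message is escalated to moderators once enough distinct users have flagged it. This is a sketch under stated assumptions rather than any platform's actual mechanism. The `ReportQueue` class, its `report` method, and the threshold of three reporters are all hypothetical.

```python
from collections import defaultdict

# Hypothetical number of distinct reporters needed before escalation.
ESCALATION_THRESHOLD = 3


class ReportQueue:
    """Tracks which users reported which messages."""

    def __init__(self, threshold=ESCALATION_THRESHOLD):
        self.threshold = threshold
        # Maps message_id -> set of reporter_ids, so repeat reports
        # from the same user are counted only once.
        self._reporters = defaultdict(set)

    def report(self, message_id, reporter_id):
        """Record a report; return True if the message should be escalated."""
        self._reporters[message_id].add(reporter_id)
        return len(self._reporters[message_id]) >= self.threshold
```

Counting distinct reporters rather than raw reports is a deliberate choice here: it keeps a single user from forcing an escalation by reporting the same message repeatedly.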

Conclusion

Character AI's chatbots present a fascinating and complex challenge for free speech protection. Balancing the platform's commitment to open expression with the need to mitigate harmful content requires careful consideration of legal frameworks, ethical implications, and technological advancements. Moving forward, developers, policymakers, and users will need to collaborate to navigate this evolving landscape. An open, sustained dialogue about free speech on Character AI is needed to develop effective strategies for safeguarding users and to ensure that it and similar AI technologies are developed and used responsibly and ethically.

Featured Posts

-

Zimbabwe Seal First Test Victory Over Bangladesh Muzarabanis Stellar Performance

May 23, 2025

Zimbabwe Seal First Test Victory Over Bangladesh Muzarabanis Stellar Performance

May 23, 2025 -

This Morning Cat Deeleys Dress Malfunction Before Live Show

May 23, 2025

This Morning Cat Deeleys Dress Malfunction Before Live Show

May 23, 2025 -

Distributie De Vis Pe Netflix Serialul Care Redefineste Standardele

May 23, 2025

Distributie De Vis Pe Netflix Serialul Care Redefineste Standardele

May 23, 2025 -

2025 Emmy Predictions Lead Actress In A Limited Series Contenders

May 23, 2025

2025 Emmy Predictions Lead Actress In A Limited Series Contenders

May 23, 2025 -

Planning For Memorial Day 2025 Date And Weekend Activities

May 23, 2025

Planning For Memorial Day 2025 Date And Weekend Activities

May 23, 2025

Latest Posts

-

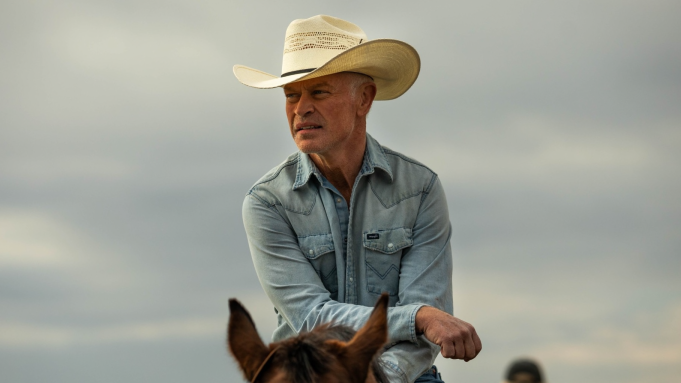

The Last Rodeo Featuring Neal Mc Donough

May 23, 2025

The Last Rodeo Featuring Neal Mc Donough

May 23, 2025 -

Neal Mc Donoughs The Last Rodeo A Western Drama

May 23, 2025

Neal Mc Donoughs The Last Rodeo A Western Drama

May 23, 2025 -

Review Neal Mc Donough In The Last Rodeo

May 23, 2025

Review Neal Mc Donough In The Last Rodeo

May 23, 2025 -

Dc Legends Of Tomorrow The Ultimate Fans Resource

May 23, 2025

Dc Legends Of Tomorrow The Ultimate Fans Resource

May 23, 2025 -

Dc Legends Of Tomorrow Tips And Tricks For Beginners

May 23, 2025

Dc Legends Of Tomorrow Tips And Tricks For Beginners

May 23, 2025