OpenAI Facing FTC Investigation: Exploring The Future Of AI Governance

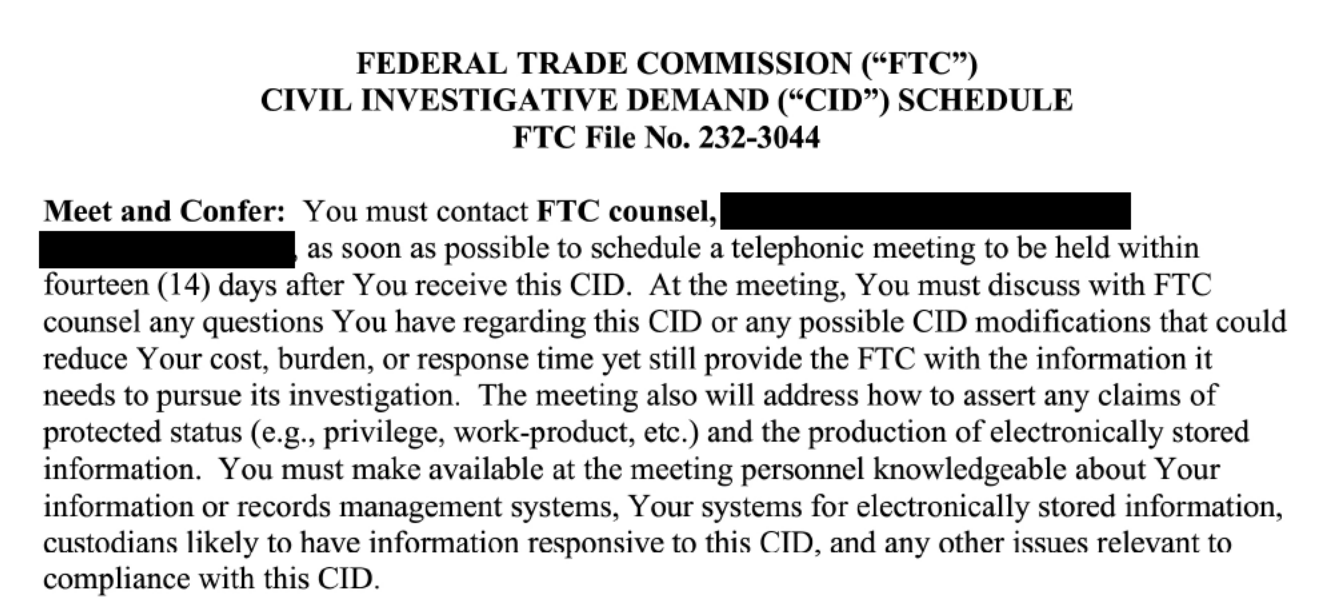

The FTC Investigation: What We Know

The FTC has disclosed little about its investigation into OpenAI, but it reportedly centers on potential violations related to data privacy, consumer protection, and AI bias within OpenAI's products, most notably its widely used GPT models. The agency is scrutinizing how OpenAI collects, uses, and protects the personal data that goes into training those models, a question made more pressing by the scale of the datasets behind systems like GPT-4.

- Specific allegations against OpenAI: While specifics remain confidential, the investigation likely focuses on whether OpenAI adequately informed users about how their data was being used and whether appropriate safeguards were in place to prevent misuse or discriminatory outcomes. Reports suggest the concerns fall under Section 5 of the FTC Act, which prohibits unfair or deceptive acts or practices.

- Potential penalties OpenAI faces: Depending on the findings, OpenAI could face substantial fines, mandatory changes to its data practices, and even restrictions on its AI development activities. The penalties could set a precedent for other AI companies.

- Timeline of the investigation: The FTC's investigation is ongoing, with no confirmed completion date. The process could take months, or even years, to fully unfold.

Data Privacy Concerns in AI Development

The development of sophisticated AI models like GPT-4 relies on massive datasets, which raises ethical and legal questions about data privacy. When training data includes personal information gathered without explicit consent, developers risk breaching privacy regulations as well as users' trust.

- GDPR and CCPA implications: The General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA), a state law in the US, impose strict requirements on how personal data is collected, processed, and protected. OpenAI's practices must comply with these and any other privacy regulations that apply where it operates.

- Informed consent and data transparency: Users should be fully informed about how their data is being used to train AI models. Transparency about the data collection process and the purposes for which the data is utilized is crucial for ensuring ethical AI development.

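- Potential solutions for anonymization and data protection: Techniques like data anonymization and differential privacy can help protect user privacy while still allowing for the training of effective AI models. However, these methods aren't foolproof: anonymized records can sometimes be re-identified by linking them with other datasets, so both approaches require careful implementation.

- The role of differential privacy in AI: Differential privacy adds carefully calibrated noise to computations over the data, such as aggregate statistics or the gradient updates used in training, so that the output changes very little whether or not any one person's record is included. This makes it statistically difficult to infer anything about an individual while preserving most of the data's utility for AI training.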
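
To make the idea of calibrated noise concrete, here is a minimal sketch of the Laplace mechanism applied to a simple counting query. It is an illustration only, not a description of how OpenAI or any other company trains its models; the function names, the record layout, and the choice of a counting query are assumptions made for this example.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a noisy count of the records matching `predicate`.

    A counting query has sensitivity 1: adding or removing one person's
    record changes the true count by at most 1, so the Laplace noise
    scale is 1 / epsilon. Smaller epsilon means more noise and more privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical records, invented purely for illustration.
    rng = random.Random(42)
    users = [{"age": age} for age in range(18, 90)]
    noisy = private_count(users, lambda u: u["age"] >= 65, epsilon=0.5, rng=rng)
    print(f"Noisy count of users aged 65+: {noisy:.1f}")
```

Any single released answer is off by a random amount, but averaged over many releases the noise cancels out. That is also why real deployments track a cumulative privacy budget: every additional query answered about the same data weakens the guarantee.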

Bias and Fairness in AI Systems

AI models are trained on data, and if that data reflects existing societal biases, the AI system will likely perpetuate and even amplify those biases. This can lead to unfair or discriminatory outcomes in various applications, from loan applications to criminal justice.

- Examples of bias in AI systems: Biased AI systems have been shown to unfairly discriminate against certain racial or gender groups in areas like facial recognition, loan applications, and hiring processes.

- Methods for mitigating bias in AI development: No single technique is enough. Mitigating bias takes careful data curation, algorithmic fairness techniques, and ongoing monitoring and auditing used together.

- The importance of diverse datasets: Using diverse and representative datasets is essential for reducing bias in AI models. A balanced dataset makes fair outcomes more likely, though it does not guarantee them on its own.

- The need for ongoing monitoring and auditing: AI systems should be continuously monitored and audited after deployment to identify and address emerging biases, with regular testing against fairness metrics and adjustments when the results drift.
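
As a rough illustration of what one such audit check might look like, the sketch below computes the demographic parity gap: the difference between the highest and lowest rate of positive decisions across groups. This is only one of several fairness metrics, and which metric is appropriate depends on the application. The function names and the toy data are assumptions made for this example.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Return the largest difference in selection rate between any two groups.

    A gap of 0 means every group receives positive decisions at the same
    rate; larger values indicate a bigger disparity worth investigating.
    """
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical loan decisions (1 = approved) for two made-up groups.
    decisions = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(selection_rates(decisions, groups))         # {'a': 0.75, 'b': 0.25}
    print(demographic_parity_gap(decisions, groups))  # 0.5
```

A large gap does not prove discrimination by itself, since groups can differ in legitimate ways, but tracking the number over time gives auditors a concrete signal to investigate.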

The Need for Robust AI Governance Frameworks

The FTC investigation highlights the urgent need for clear, comprehensive guidelines and regulations to govern the development and deployment of AI. The rapid advancement of AI necessitates a proactive approach to ensure responsible innovation and protect users.

- Arguments for stricter AI regulation: Proponents of stricter regulation argue that it's necessary to protect individuals' rights, prevent discrimination, and ensure the responsible use of AI.

- Discussion of self-regulation vs. government oversight: While self-regulation by companies has a role, it leaves firms policing their own commercial interests, so robust government oversight is necessary to ensure accountability. That oversight must in turn be designed to resist regulatory capture. A balanced approach is needed.

- Examples of existing or proposed AI regulations (EU AI Act): The EU AI Act, which entered into force in August 2024, is a landmark piece of legislation that establishes a comprehensive, risk-based framework for regulating AI within the European Union. Other countries and regions are also developing their own AI governance frameworks.

- The role of international collaboration in AI governance: Given the global nature of AI development and deployment, international collaboration is crucial for establishing consistent and effective AI governance standards.

The Future of AI and Responsible Innovation

Navigating the ethical challenges and ensuring responsible AI development requires a multifaceted approach emphasizing transparency, accountability, and ethical considerations.

- Importance of explainable AI (XAI): Making AI systems more explainable is crucial for understanding how they reach their decisions and for identifying potential biases or errors.

- The role of independent audits and certifications: Independent audits and certifications can help build trust and ensure that AI systems meet ethical and safety standards.

- The need for greater transparency in AI algorithms: Greater transparency in AI algorithms will help users understand how these systems work and build confidence in their fairness and accuracy.

- The long-term impact of AI on society and the economy: Careful consideration of the long-term societal and economic impacts of AI is critical for ensuring that its benefits are broadly shared and its risks are mitigated.

Conclusion

The FTC's investigation into OpenAI underscores the critical need for robust and effective AI governance. The development and deployment of advanced AI systems demand careful consideration of ethical implications, data privacy, and the potential for bias. Moving forward, a collaborative effort between policymakers, researchers, and industry leaders is essential to establish clear guidelines, foster responsible innovation, and ensure that AI benefits all of society. Everyone affected by these systems has a stake in that conversation, and taking part in it is how we shape a future where AI is developed and used responsibly.
