Responsible AI: Acknowledging And Addressing Learning Limitations

Data Bias and its Impact on AI Systems

Biased datasets are a significant threat to the fairness and reliability of AI systems. When AI models are trained on data that reflects existing societal biases, they tend to reproduce those biases in their outputs and can even amplify them. The result can be unfair or discriminatory outcomes that undermine the very purpose of using AI to improve lives.

For example, facial recognition systems have been shown to be less accurate in identifying individuals with darker skin tones, leading to misidentification and potentially unjust consequences. Similarly, AI-powered loan approval systems trained on biased historical data might unfairly deny loans to certain demographic groups.

- Lack of diversity in training data: Insufficient representation of various demographics in the datasets used to train AI models is a primary source of bias.

- Amplification of existing societal biases: AI systems can inadvertently learn and amplify pre-existing biases present in the data, leading to discriminatory results.

- Consequences of biased AI decisions: Biased AI can lead to unequal access to services, unfair judgments in legal proceedings, and other forms of discrimination.

Mitigation strategies are crucial to address data bias. These include:

- Data augmentation and rebalancing: Collecting, oversampling, or synthesizing additional examples that represent underrepresented groups to balance the dataset.

- Bias detection algorithms: Employing algorithms that identify and flag potential biases within datasets before model training.

- Careful data curation: Meticulously reviewing and cleaning datasets to remove or mitigate biased information.
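As an illustration of what a basic bias check might look like before training, the sketch below measures how well each group is represented and how often each group receives a positive label, then compares the lowest and highest rates. The data, the group names, and the helper functions are all hypothetical; real audits typically use richer fairness metrics and domain review.

```python
from collections import Counter, defaultdict


def group_shares(groups):
    """Fraction of records belonging to each group."""
    counts = Counter(groups)
    total = len(groups)
    return {g: n / total for g, n in counts.items()}


def positive_rates(groups, labels):
    """Fraction of positive labels (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for g, y in zip(groups, labels):
        totals[g] += 1
        positives[g] += y
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical toy data: group membership and historical loan approvals.
groups = ["a", "a", "a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

shares = group_shares(groups)          # representation of each group
rates = positive_rates(groups, labels)  # approval rate per group
ratio = disparate_impact_ratio(rates)

print(shares, rates, round(ratio, 3))
```

A ratio well below 1.0 suggests that the historical labels favor one group. A commonly cited heuristic treats values under 0.8 as worth investigating, but the appropriate threshold depends on the application.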

The Problem of Overfitting and Underfitting in Machine Learning

Overfitting and underfitting are common challenges in machine learning that directly impact the reliability and generalizability of AI models. Understanding these concepts is vital for building robust and responsible AI systems.

- Overfitting: An overfit model performs exceptionally well on the training data but poorly on new, unseen data. It has fit the noise and idiosyncrasies of the training set instead of learning the underlying patterns, so its predictions are unreliable in real-world applications.

- Underfitting: An underfit model fails to capture the underlying patterns in the data, leading to poor performance on both training and new data. It is too simplistic to accurately represent the complexities of the problem.

Both overfitting and underfitting undermine the ability of an AI system to make accurate and reliable predictions. Several techniques can help address these issues:

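- Cross-validation: Dividing the dataset into multiple subsets (folds), training on all but one, and evaluating on the held-out fold, rotating until every fold has served as unseen data.

- Regularization: Adding penalty terms (for example, L1 or L2 penalties on the model's weights) to the training objective to discourage overly complex models and reduce overfitting.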

- Feature selection: Carefully choosing the most relevant features to improve model performance and prevent overfitting.
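Cross-validation and regularization can be sketched together in a few lines. The example below assumes a deliberately simple one-feature linear model with an L2 (ridge) penalty and uses only the standard library; the function names and the synthetic data are illustrative, not taken from any particular framework.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k nearly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_ridge_1d(xs, ys, lam):
    """Fit y ~ w * x (no intercept), minimizing squared error + lam * w**2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def mse(w, xs, ys):
    """Mean squared error of the prediction w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def cross_val_mse(xs, ys, lam, k=5):
    """Average held-out MSE across k folds for a given penalty strength."""
    scores = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        scores.append(mse(w, [xs[i] for i in held_out], [ys[i] for i in held_out]))
    return sum(scores) / k


# Synthetic data (an assumption for illustration): y = 2x plus Gaussian noise.
rng = random.Random(42)
xs = [i / 10 for i in range(1, 21)]
ys = [2 * x + rng.gauss(0, 0.3) for x in xs]

for lam in (0.0, 1.0, 10.0):
    print(lam, cross_val_mse(xs, ys, lam))
```

Comparing the held-out error across penalty strengths is one way to choose how much regularization to apply; a large gap between training error and held-out error is a typical sign of overfitting.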

Addressing Explainability and Transparency in AI

The "black box" nature of some AI models, particularly deep learning systems, raises significant concerns regarding explainability and transparency. Understanding why an AI system arrived at a particular decision is critical, especially in high-stakes applications like healthcare or finance.

- Importance of explainable AI (XAI): XAI techniques aim to make the decision-making process of AI models more transparent and understandable. This builds trust and accountability.

- Techniques for improving AI transparency: Methods such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) help provide insights into the factors influencing AI predictions.

- The ethical implications of “black box” AI systems: The lack of transparency can make it difficult to identify and correct biases or errors, leading to potential ethical concerns and a lack of trust.
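To give a sense of the idea behind SHAP, the sketch below computes exact Shapley values for a tiny hypothetical scoring function by averaging each feature's marginal contribution over every ordering of the features. This is a brute-force illustration, not the API of the SHAP library, and it assumes that a "missing" feature can be represented by a baseline value. The cost grows factorially with the number of features, which is why practical tools rely on approximations.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values: average marginal contribution over all orderings."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)       # start with every feature "missing"
        previous = predict(current)
        for i in order:
            current[i] = instance[i]   # reveal feature i
            value = predict(current)
            totals[i] += value - previous
            previous = value
    return [t / len(orderings) for t in totals]


def toy_score(features):
    """Hypothetical credit-style score with one interaction term."""
    income, debt, years = features
    return 0.5 * income - 0.3 * debt + 0.1 * income * years


instance = [4.0, 2.0, 3.0]   # the prediction being explained
baseline = [0.0, 0.0, 0.0]   # assumed reference input

phi = shapley_values(toy_score, instance, baseline)
print(phi)
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is shared equally between the two features involved in it.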

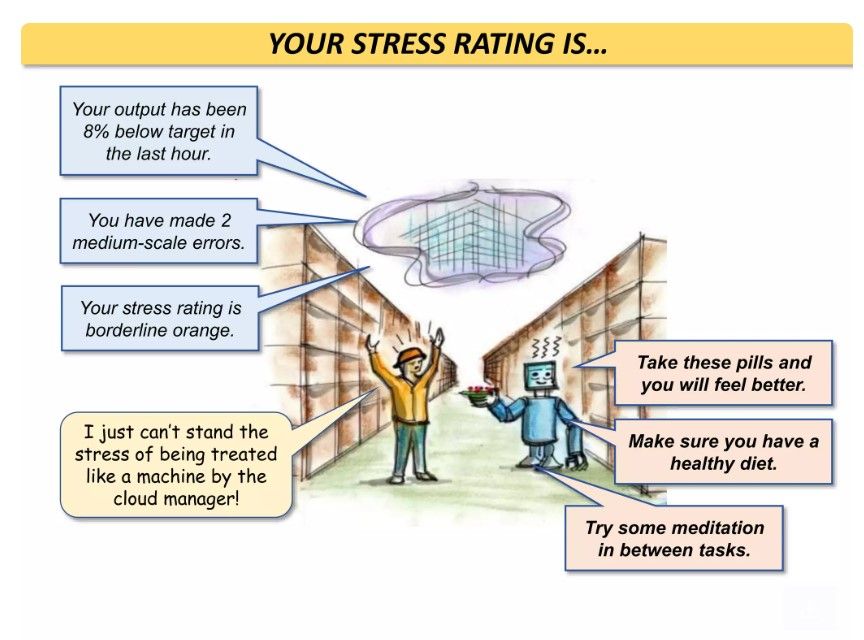

Continuous Monitoring and Evaluation of AI Systems

Responsible AI development is not a one-time effort; it requires continuous monitoring and evaluation to detect and correct errors, biases, or unexpected behaviors. Ongoing assessment is crucial for ensuring the long-term ethical and effective performance of AI systems.

- Regular performance audits and bias checks: Periodically evaluating AI systems for performance degradation and the emergence of biases.

- Feedback mechanisms for users to report issues: Creating channels for users to report problems or concerns with AI system outputs.

- Adapting AI systems to changing data and contexts: Regularly updating and retraining AI models to account for evolving data and changing environmental factors.
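One way to notice that incoming data has drifted away from the training data is to compare the distribution of a feature across two time periods. The sketch below computes the Population Stability Index (PSI), a widely used drift measure, with the standard library only. The bin count, the smoothing constant, and the sample data are assumptions chosen for illustration.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0   # fall back to 1.0 if the reference is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            b = int((v - lo) / width)
            counts[min(max(b, 0), bins - 1)] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values at training time and in production.
reference = [i / 100 for i in range(100)]
recent_same = list(reference)
recent_shifted = [x + 0.5 for x in reference]

print(psi(reference, recent_same))     # no drift
print(psi(reference, recent_shifted))  # substantial drift
```

A PSI near zero indicates that the two samples are distributed similarly. Common rules of thumb treat values above roughly 0.1 as a moderate shift and above roughly 0.25 as a significant one, though suitable thresholds for triggering retraining vary by application.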

Conclusion: Embracing Responsible AI Development

Building Responsible AI necessitates a proactive approach to acknowledging and addressing the inherent learning limitations of AI systems. By focusing on mitigating data bias, improving model generalizability, enhancing transparency, and implementing robust monitoring strategies, we can create AI systems that are fair, reliable, and beneficial to society.

Let's work together to develop and deploy Responsible AI solutions that prioritize ethical considerations and minimize potential harms. Join the conversation and learn more about building AI systems that are both powerful and accountable. Embrace the challenge of responsible AI development and help shape a future where AI serves humanity effectively and ethically.
