Responsible AI: Addressing The Misconception Of True AI Learning

The Limitations of Current AI "Learning"

Current AI systems, while impressive in their capabilities, are far from replicating the nuanced learning processes of the human brain. Their "learning" is fundamentally different, constrained by their design and the data they are trained on.

Supervised vs. Unsupervised Learning:

Supervised and unsupervised learning are two of the dominant paradigms in machine learning. Both differ significantly from human learning.

- Supervised learning: This approach relies on datasets, often very large, in which each data point is labeled with the correct answer. The model learns to map inputs to outputs from these labeled examples. For example, an image recognition system might be trained on millions of images labeled "cat" or "dog." This reliance on pre-existing labels limits the model's ability to adapt to scenarios that are not represented in the training data, and it does not reason or generalize the way a human can.

- Unsupervised learning: In contrast, unsupervised learning attempts to find patterns and structure in unlabeled data. This allows hidden relationships to be discovered, but it is prone to surfacing spurious correlations: relationships that appear significant but have no causal basis. Acting on them can lead to inaccurate or misleading conclusions. Although it looks more autonomous, unsupervised learning still lacks the contextual understanding and reasoning abilities of human learning.

- Neither supervised nor unsupervised learning truly mimics human learning, which involves reasoning, intuition, and an understanding of context, capabilities that current AI lacks. This difference is crucial to understanding the limitations and potential risks of deploying AI systems without proper safeguards.
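To make the contrast concrete, here is a minimal sketch in plain Python. It is only an illustration under stated assumptions: the toy points, the "cat"/"dog" labels, and the helper names `nearest_neighbor_predict` and `two_means` are all hypothetical, and a nearest-neighbor rule and a two-cluster k-means stand in for the far larger models used in practice.

```python
from math import dist

# Hypothetical toy data: 2-D points. Only the supervised learner sees the labels.
points = [(1.0, 1.2), (0.8, 1.0), (1.2, 0.9), (4.0, 4.1), (4.2, 3.9), (3.9, 4.3)]
labels = ["cat", "cat", "cat", "dog", "dog", "dog"]


def nearest_neighbor_predict(x, train_x, train_y):
    """Supervised: return the label of the closest labeled training point."""
    best = min(range(len(train_x)), key=lambda i: dist(x, train_x[i]))
    return train_y[best]


def two_means(data, iterations=10):
    """Unsupervised: split the data into two clusters without using labels."""
    centers = [data[0], data[-1]]  # deterministic start, for reproducibility
    assignment = [0] * len(data)
    for _ in range(iterations):
        # Assign every point to its nearest center.
        assignment = [min((0, 1), key=lambda c: dist(p, centers[c])) for p in data]
        # Move each center to the mean of the points assigned to it.
        for c in (0, 1):
            members = [p for p, a in zip(data, assignment) if a == c]
            if members:
                centers[c] = tuple(sum(v) / len(members) for v in zip(*members))
    return assignment


prediction = nearest_neighbor_predict((1.1, 1.0), points, labels)
clusters = two_means(points)

# A point unlike anything in the training data still receives a label.
far_away = nearest_neighbor_predict((100.0, 100.0), points, labels)
```

The supervised learner can only ever answer with a label it was given, and the clustering step returns group numbers with no meaning attached. Neither has any notion of "this input is unlike anything I have seen", which is the gap the section describes.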

The Data Dependency Problem:

AI systems are fundamentally dependent on the data they are trained on. This dependence creates a significant challenge: the inherent biases present in many datasets.

- Biased datasets lead to biased AI models: If the training data reflects existing societal biases (e.g., gender, racial, or socioeconomic biases), the resulting model will likely perpetuate them and can even amplify them. The result can be unfair or discriminatory outcomes.

- The "garbage in, garbage out" principle: This adage captures the data dependency problem. The quality and representativeness of the training data, and the degree of bias in it, directly affect the performance and fairness of the AI system. Poor data leads to poor performance, potentially with harmful real-world consequences.

- Addressing data bias: Mitigating bias requires careful data selection, augmentation techniques (e.g., synthetic data generation to balance underrepresented groups), and algorithmic adjustments to counteract biased patterns. This is an ongoing area of research and development in responsible AI.
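As a rough illustration of balancing underrepresented groups, the sketch below uses random oversampling, which simply duplicates existing records. This is a deliberately simpler stand-in for synthetic data generation, not the same technique. The record layout, the `group` field, and the function name `oversample_to_balance` are assumptions made for the example.

```python
import random
from collections import Counter


def oversample_to_balance(records, group_key, seed=0):
    """Duplicate randomly chosen records from smaller groups until every
    group has as many records as the largest one."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    by_group = {}
    for record in records:
        by_group.setdefault(record[group_key], []).append(record)

    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Top up the group with random duplicates of its own records.
        balanced.extend(rng.choice(members) for _ in range(target - len(members)))
    return balanced


# Hypothetical toy dataset in which group "B" is underrepresented.
records = [{"group": "A", "label": 1}] * 8 + [{"group": "B", "label": 0}] * 2
balanced = oversample_to_balance(records, "group")
counts = Counter(record["group"] for record in balanced)
```

Balancing group counts this way does not correct biased labels, and heavy duplication can encourage a model to memorize the few minority examples, so it is best treated as one step among the several the section lists.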

The Lack of Generalization:

A major limitation of current AI is its struggle with generalization – the ability to apply learned knowledge to new and unseen situations.

- Current AI models often struggle with tasks outside their training data: This makes them brittle and unreliable in real-world settings, where unexpected scenarios are commonplace. A self-driving car trained only on sunny-day driving might perform poorly in snow or rain.

- Improving generalization requires more sophisticated architectures and training methods: Researchers are actively exploring techniques such as transfer learning and meta-learning to improve AI's ability to generalize. However, achieving human-level generalization remains a significant challenge.

- The pursuit of Artificial General Intelligence (AGI): Developing AGI, meaning AI systems with human-level intelligence that can learn and adapt across a wide range of tasks, is a long-term goal. The limitations in generalization show how far current AI is from this ambitious objective.
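The brittleness described above can be reproduced on a very small scale. In this sketch, a straight line is fitted to a curved relationship using only inputs between 0 and 1, then evaluated on inputs between 3 and 4. The target function, the input ranges, and the helper names are assumptions chosen for illustration; the "sunny-day" range plays the role of the training data and the shifted range plays the role of snow or rain.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


def mean_abs_error(model, xs, truth):
    """Average absolute gap between the fitted line and the true function."""
    slope, intercept = model
    return sum(abs(slope * x + intercept - truth(x)) for x in xs) / len(xs)


def truth(x):
    # Hypothetical "real world" relationship the model never sees directly.
    return x ** 2


train_xs = [i / 10 for i in range(11)]        # inputs from 0.0 to 1.0
shifted_xs = [3 + i / 10 for i in range(11)]  # inputs from 3.0 to 4.0

model = fit_line(train_xs, [truth(x) for x in train_xs])
in_range_error = mean_abs_error(model, train_xs, truth)
out_of_range_error = mean_abs_error(model, shifted_xs, truth)
```

Comparing `in_range_error` with `out_of_range_error` shows the pattern: the fit looks acceptable where it was trained and degrades sharply once the inputs move away from that range, even though nothing about the model changed.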

Building Responsible AI Systems

Addressing the limitations of current AI learning requires a focus on building responsible AI systems – systems that are ethical, transparent, and safe.

Explainable AI (XAI):

The "black box" nature of many complex AI models is a major concern. Explainable AI (XAI) aims to make the decision-making processes of these models more understandable and transparent.

- XAI helps to build trust and accountability in AI systems: By understanding how an AI system arrives at its conclusions, we can better assess its reliability and identify potential biases or errors.

- Techniques like LIME and SHAP provide insights into the decision-making processes: LIME fits a simple surrogate model around an individual prediction, while SHAP attributes a prediction to its input features using Shapley values from cooperative game theory. Both estimate how much each feature contributed to a prediction, which gives a degree of transparency that is crucial for trust and accountability.

- Explainability is crucial for identifying and mitigating bias and errors: Knowing why an AI system made a particular decision is a prerequisite for detecting and correcting biases and errors, which in turn leads to fairer and more reliable outcomes.
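To give a feel for what feature attribution means, the sketch below computes exact Shapley values by brute force for a tiny, made-up scoring function. This is the quantity that SHAP-style methods approximate, but it is not the `shap` library's API, and the model, feature names, and all-zero baseline are hypothetical choices for the example. The cost grows factorially with the number of features, so this only works for toy cases.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Exact Shapley attributions: average each feature's marginal
    contribution over every possible ordering of the features."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)  # start from the reference input
        previous = model(current)
        for name in order:
            current[name] = instance[name]  # switch this feature "on"
            value = model(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


def toy_model(features):
    # Hypothetical score with an interaction between income and debt;
    # age is deliberately ignored by the model.
    return (2.0 * features["income"]
            - 1.0 * features["debt"]
            + 0.5 * features["income"] * features["debt"])


instance = {"income": 3.0, "debt": 2.0, "age": 40.0}
baseline = {"income": 0.0, "debt": 0.0, "age": 0.0}
attributions = shapley_values(toy_model, instance, baseline)
```

The attributions add up to the difference between the model's output on `instance` and on `baseline`, and a feature the model never uses (here `age`) receives zero. Properties like these are what make such attributions useful when checking whether a model leans on a feature it should not.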

AI Ethics and Safety:

The development and deployment of AI systems raise significant ethical concerns that demand careful consideration.

- Consider the potential societal impacts of AI: These include job displacement, the exacerbation of existing societal inequalities, and privacy violations.

- Develop ethical guidelines and regulations to govern the development and use of AI: Clear ethical frameworks and regulations are crucial for ensuring the responsible use of AI.

- Promote responsible innovation by prioritizing human well-being and societal benefit: AI development should put human well-being first and aim for outcomes that benefit society broadly.

Ensuring AI Transparency:

Transparency is crucial for building trust and accountability in AI systems. Several mechanisms can help ensure this transparency.

- Open-source AI models and datasets promote collaboration and scrutiny: Making AI models and datasets publicly available allows for independent verification, which can improve the quality and trustworthiness of AI systems.

- Auditing AI systems for bias and fairness is essential for responsible use: Regular audits help identify and mitigate biases, supporting fair and equitable outcomes.

- Regularly evaluating the performance and impact of AI systems is crucial for ongoing improvement: Continuous monitoring and evaluation are vital for identifying and addressing potential problems and for ensuring that AI systems remain responsible and beneficial.
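One simple check an audit might include is comparing how often each group receives a positive decision, sometimes called a demographic parity check. The sketch below is a minimal version under stated assumptions: the decision log, the group names, and the helper names `selection_rates` and `demographic_parity_gap` are hypothetical, and a real audit would look at several metrics rather than this one alone.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of positive decisions per group.

    `decisions` is an iterable of (group, approved) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log: group A is approved 6 times out of 10,
# group B only 3 times out of 10.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(decisions)
gap = demographic_parity_gap(rates)
```

A large gap does not prove discrimination by itself, since the groups may differ in relevant ways, but it flags where a closer review is warranted. Running the same check on a schedule is one way to put the continuous monitoring described above into practice.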

Conclusion:

This article has explored the limitations of current AI "learning" and emphasized the critical need for responsible AI development. True AI learning, as we understand it in humans, remains a distant goal. However, by focusing on transparency, explainable AI, ethical considerations, and safety, we can harness the power of AI for good while mitigating its potential risks. The future of AI hinges on our commitment to responsible innovation.

Call to Action: Let's move towards a future of responsible AI, where AI systems are developed and deployed ethically and transparently. Learn more about building responsible AI, join the conversation, and help shape a future in which AI benefits all of society.

Featured Posts

-

Complete Guide To Nyt Mini Crossword Answers March 16 2025

May 31, 2025

Complete Guide To Nyt Mini Crossword Answers March 16 2025

May 31, 2025 -

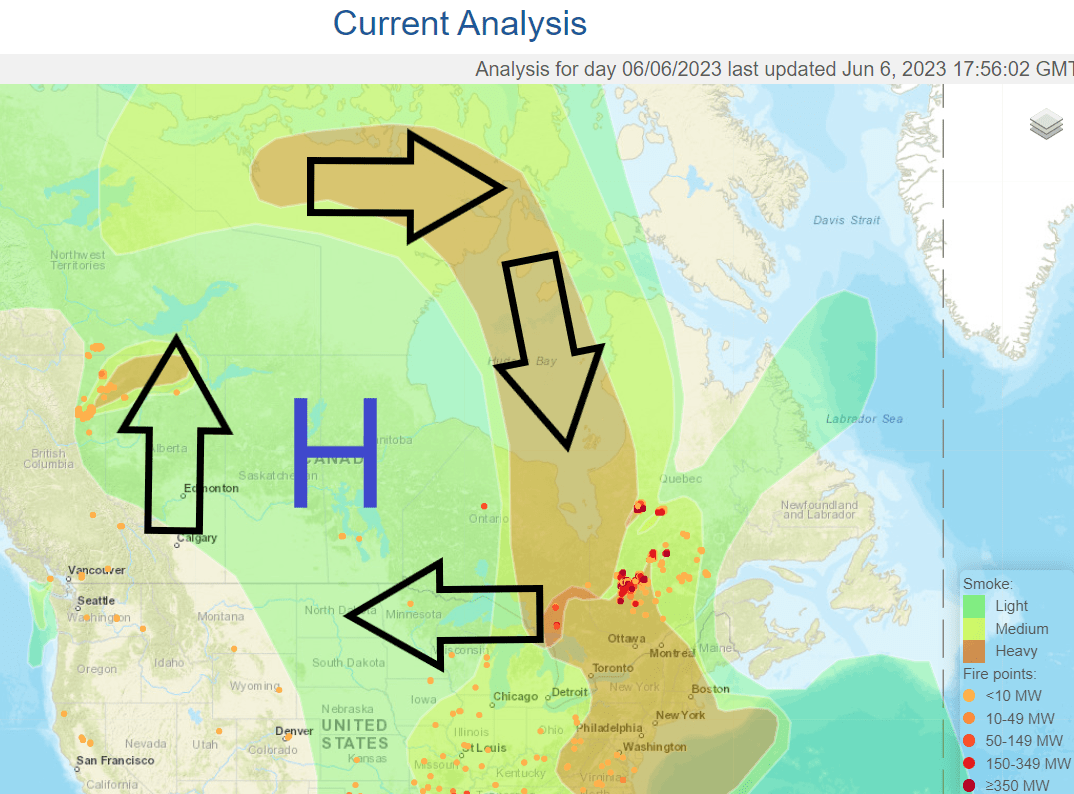

Increased Wildfire Risk Canada And Minnesota Face Early Fire Season

May 31, 2025

Increased Wildfire Risk Canada And Minnesota Face Early Fire Season

May 31, 2025 -

Economic Experts Warn Ecb Against Postponing Final Rate Cuts

May 31, 2025

Economic Experts Warn Ecb Against Postponing Final Rate Cuts

May 31, 2025 -

Over 100 Firefighters Battle East London High Street Shop Blaze

May 31, 2025

Over 100 Firefighters Battle East London High Street Shop Blaze

May 31, 2025 -

Global Increase In Covid 19 Cases The Role Of A New Variant

May 31, 2025

Global Increase In Covid 19 Cases The Role Of A New Variant

May 31, 2025