We Now Know How AI "Thinks"—and It's Barely Thinking At All

The Illusion of Intelligence: How AI Mimics Thought

Statistical Pattern Recognition, Not Understanding

AI algorithms excel at identifying patterns in vast datasets. This ability fuels many impressive applications of AI, but it's crucial to understand that this pattern recognition is fundamentally different from human understanding. AI doesn't "comprehend" in the human sense; it estimates which output is statistically most likely given the patterns in its training data.

- Image recognition: An AI can identify a cat in a picture with astonishing accuracy, but it doesn't "know" what a cat is in the way a human does. It identifies patterns of pixels that statistically correlate with the label "cat."

- Language translation: AI can translate languages with impressive fluency, but it doesn't understand the nuances of meaning or context the way a human translator would. It maps patterns of words and phrases from one language to another.

This reliance on statistical probabilities contributes to the "black box" nature of many AI systems. Their internal decision-making processes are often opaque, making it difficult to understand why an AI arrived at a particular conclusion.
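
To make "statistical probabilities" concrete, here is a minimal sketch of the last step of a typical classifier: raw scores are turned into probabilities with a softmax, and the most probable label is reported. The scores and labels are invented for illustration and do not come from any real model.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - peak) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

# Hypothetical raw scores a trained model might assign to one image.
raw_scores = {"cat": 4.0, "dog": 2.0, "fox": 1.0}

probabilities = softmax(raw_scores)
prediction = max(probabilities, key=probabilities.get)
print(prediction, round(probabilities[prediction], 3))
```

Nothing in this step refers to what a cat is. The label "cat" is simply the key with the largest number attached to it.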

The Role of Big Data in AI "Thinking"

The impressive capabilities of modern AI are inextricably linked to the availability of massive datasets. Training an AI requires feeding it enormous amounts of data so it can identify those crucial statistical patterns. However, this dependence has significant limitations:

- Bias in datasets: If the data used to train an AI is biased (reflecting societal prejudices, for example), the AI tends to reproduce those biases in its outputs and can even amplify them.

- Overfitting: An AI might perform exceptionally well on the data it was trained on but fail to generalize to new, unseen data. This "overfitting" limits its real-world applicability.
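
Overfitting can be illustrated with a toy sketch. It assumes made-up data whose true trend is y = 2x with noisy training labels, and compares a hypothetical `memorizer` (which returns the label of the closest training input) with a simple least-squares line. All names and numbers here are illustrative assumptions.

```python
def mean_squared_error(model, points):
    """Average squared gap between the model's predictions and the targets."""
    return sum((model(x) - y) ** 2 for x, y in points) / len(points)

# Toy data (hypothetical): the underlying trend is y = 2x, but every
# training label carries noise of +1 or -1.
train = [(x, 2 * x + (1 if x % 2 == 0 else -1)) for x in range(10)]
# Unseen inputs, labelled with the noise-free trend.
test = [(x + 0.25, 2 * (x + 0.25)) for x in range(10)]

def memorizer(x):
    """Return the label of the closest training input (memorizes the noise)."""
    nearest_x, nearest_y = min(train, key=lambda point: abs(point[0] - x))
    return nearest_y

def fit_line(points):
    """Ordinary least-squares fit of a line y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

line = fit_line(train)

for name, model in [("memorizer", memorizer), ("line", line)]:
    print(name,
          "train error:", round(mean_squared_error(model, train), 3),
          "test error:", round(mean_squared_error(model, test), 3))
```

The memorizer reproduces the training set perfectly, noise included, and does clearly worse on the unseen inputs. The line fits the training data less exactly but stays much closer to the underlying trend on new points.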

The reliance on big data highlights the crucial fact that AI's "thinking" is largely a reflection of the data it's been fed, not an independent process of understanding and reasoning.

Beyond Pattern Matching: The Limits of Current AI

Lack of Common Sense Reasoning

One of the most striking limitations of current AI is the absence of common sense reasoning. Humans effortlessly navigate everyday situations using implicit knowledge and intuitive understanding. AI struggles significantly with this.

- A human understands that a bird cannot fly if its wings are clipped. An AI, however, might not make this connection without explicit training data covering this specific scenario.

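- Humans grasp contextual clues and easily resolve ambiguity. In "The trophy didn't fit in the suitcase because it was too big," a person knows at once that "it" is the trophy. AI systems can fail in such situations.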

The contrast between human and AI reasoning in ambiguous or unexpected scenarios starkly illustrates the difference between true understanding and sophisticated pattern matching.

The Absence of Consciousness and Self-Awareness

It's crucial to distinguish between the complex calculations performed by current AI and human consciousness. AI lacks subjective experience, feelings, and self-awareness.

- The philosophical implications of AI's lack of consciousness are profound, challenging our definitions of intelligence, sentience, and even life itself.

- The term "strong AI," referring to hypothetical conscious AI, is distinctly different from "weak AI," which describes the current state of the art – systems capable of performing specific tasks but lacking genuine understanding or self-awareness.

The Future of AI Thinking: Beyond Simple Pattern Recognition

The Need for Explainable AI (XAI)

To build trust and ensure responsible AI development, we need to move towards explainable AI (XAI). XAI aims to make the decision-making processes of AI systems transparent and understandable.

- XAI offers significant benefits: it allows for debugging, enhances accountability, and promotes trust in AI systems.

- Research in XAI is ongoing, exploring various techniques to make AI's "thinking" more interpretable.
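
As a rough sketch of what an "explanation" can look like, consider the simplest case: a linear scoring model, whose output splits exactly into one contribution per input feature. The feature names, weights, and bias below are invented for illustration. Explaining deep networks generally takes more involved techniques than this.

```python
# Hypothetical weights of a linear "is this a cat?" scoring model.
weights = {"whiskers": 2.0, "pointed_ears": 1.5, "barks": -3.0}
bias = -1.0

def explain(features):
    """Return the score and each feature's additive contribution to it."""
    contributions = {name: weights[name] * value
                     for name, value in features.items()}
    score = bias + sum(contributions.values())
    return score, contributions

# Hypothetical feature strengths detected in one input, each in [0, 1].
observed = {"whiskers": 0.8, "pointed_ears": 0.9, "barks": 0.1}
score, contributions = explain(observed)

# List features from most to least influential on this decision.
ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
for name, amount in ranked:
    print(f"{name}: {amount:+.2f}")
print("score:", round(score, 2))
```

A breakdown like this supports the benefits listed above: someone debugging or auditing the system can see which inputs pushed the score up and which pushed it down.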

Exploring More Sophisticated AI Architectures

Future research directions in AI explore more sophisticated architectures that might move beyond simple pattern recognition. Neuro-symbolic AI, for instance, aims to combine the strengths of statistical methods with symbolic reasoning, potentially enabling more human-like thinking.

- Advancements in neuro-symbolic AI and other approaches could lead to AI systems with enhanced reasoning abilities and a greater capacity for understanding.

- However, the development of more advanced AI systems raises important ethical considerations that require careful attention.
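
The neuro-symbolic idea can be sketched in miniature, reusing the clipped-wings example from earlier: a statistical estimate handles the typical case, while an explicit rule overrides it when known facts apply. This is a toy illustration of the general idea only, not how any particular neuro-symbolic system is built. The scores, rule, and function names are hypothetical.

```python
def statistical_can_fly(animal):
    """Stand-in for a learned model: a hypothetical probability of flight."""
    learned_scores = {"bird": 0.95, "cat": 0.01}
    return learned_scores.get(animal["kind"], 0.5)

# Explicit, human-readable rules: (condition, conclusion, reason).
RULES = [
    (lambda a: a.get("wings_clipped", False), False,
     "clipped wings prevent flight"),
]

def can_fly(animal, threshold=0.5):
    """Apply symbolic rules first; fall back to the statistical estimate."""
    for condition, conclusion, reason in RULES:
        if condition(animal):
            return conclusion, f"rule: {reason}"
    probability = statistical_can_fly(animal)
    return probability >= threshold, f"statistical estimate: {probability:.2f}"

print(can_fly({"kind": "bird"}))
print(can_fly({"kind": "bird", "wings_clipped": True}))
```

The rule supplies the connection that the statistical component would otherwise need explicit training data to learn, and the returned reason makes each decision traceable.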

Conclusion

Current AI excels at pattern recognition and mimics aspects of human intelligence in specific tasks, but it lacks true understanding, common sense reasoning, and consciousness. Its capabilities, however impressive, remain bounded by statistical pattern matching over the data it was trained on. Understanding how AI "thinks" (or rather, doesn't) is therefore crucial for responsible AI development, and these limitations call for a cautious and ethical approach to its advancement. Further research into AI's limitations and into explainable AI is a vital step toward harnessing the power of AI responsibly. Keep exploring artificial intelligence and its limitations to gain a clearer perspective on its potential and its pitfalls.

Featured Posts

-

Louisvilles 2025 Weather Crisis Snow Tornadoes And Catastrophic Flooding

Apr 29, 2025

Louisvilles 2025 Weather Crisis Snow Tornadoes And Catastrophic Flooding

Apr 29, 2025 -

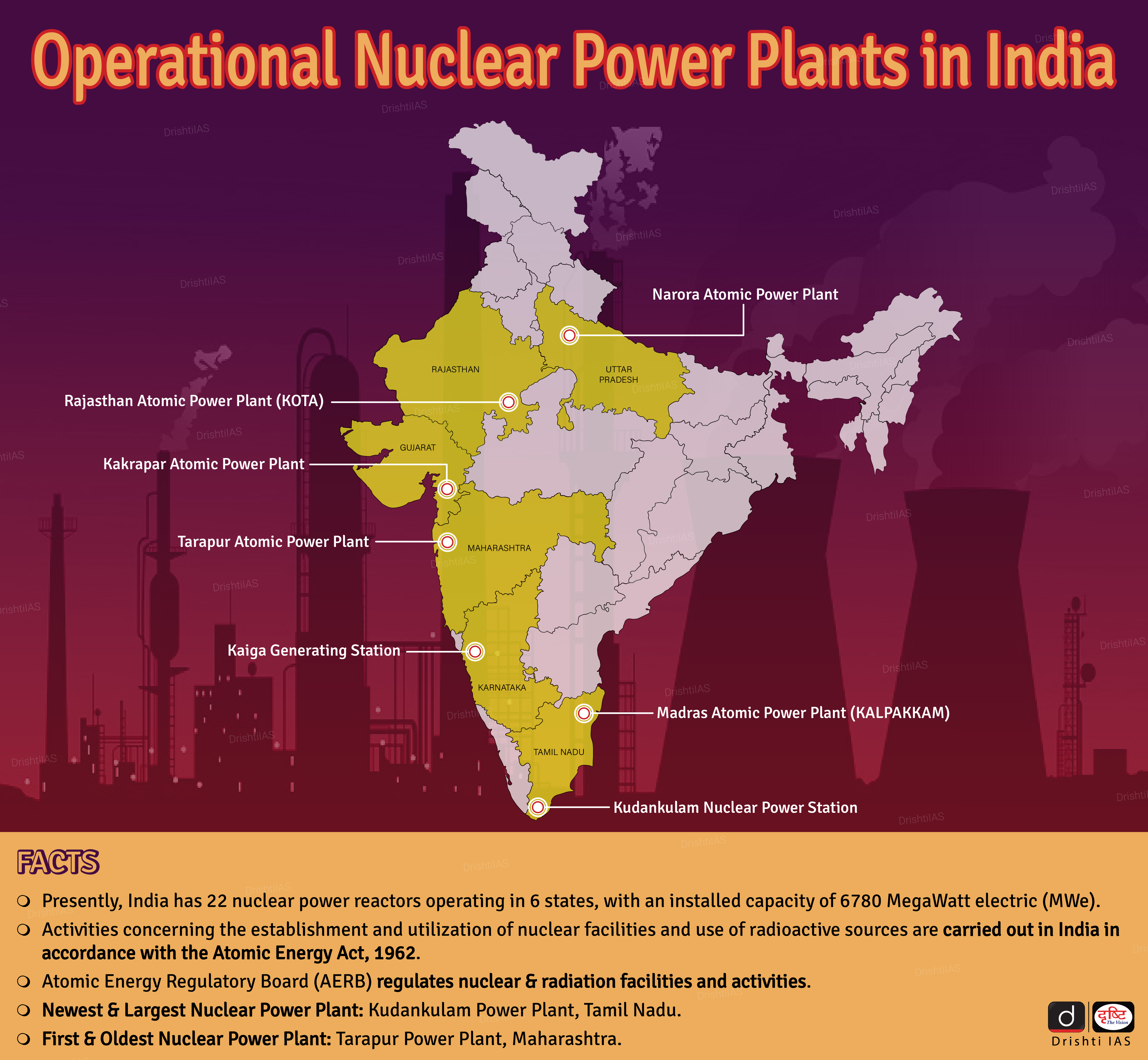

Chinas Nuclear Power Expansion 10 New Reactors Approved

Apr 29, 2025

Chinas Nuclear Power Expansion 10 New Reactors Approved

Apr 29, 2025 -

The Oh What A Beautiful World Album A Deep Dive Into Willie Nelsons Latest

Apr 29, 2025

The Oh What A Beautiful World Album A Deep Dive Into Willie Nelsons Latest

Apr 29, 2025 -

Porsche Cayenne Gts Coupe Szczegolowy Test I Opinia

Apr 29, 2025

Porsche Cayenne Gts Coupe Szczegolowy Test I Opinia

Apr 29, 2025 -

New Music Willie Nelsons Oh What A Beautiful World Featuring Rodney Crowell Duet

Apr 29, 2025

New Music Willie Nelsons Oh What A Beautiful World Featuring Rodney Crowell Duet

Apr 29, 2025