Are Tech Companies Responsible When Algorithms Radicalize Mass Shooters?


The Role of Algorithms in Online Radicalization

Ranking and recommendation algorithms, the largely invisible systems that decide what we see online, can play a significant role in the spread of extremist ideologies. Their impact is multifaceted and can create an environment ripe for radicalization.

Echo Chambers and Filter Bubbles

Many platform algorithms are optimized for engagement, which often favors sensational and divisive content over factual information. This can create echo chambers and filter bubbles that reinforce pre-existing beliefs and limit exposure to alternative perspectives.

- Engagement over Truth: Social media platforms prioritize content that generates high engagement, regardless of its accuracy or ethical implications. This incentivizes the creation and sharing of extremist content designed to provoke strong emotional reactions.

- Personalized Content Feeds: Algorithms curate personalized feeds based on user activity, further isolating individuals within their echo chambers. The more time spent consuming extremist content, the more the algorithm reinforces this pattern.

- Algorithmic Bias: Biases in training data or ranking objectives can inadvertently amplify extremist voices and perspectives, creating a skewed online landscape that disproportionately exposes users to harmful ideologies.

Together, these factors can contribute to radicalization and, for some individuals, to a path toward violence. The impact of echo chambers and filter bubbles on online extremism should not be underestimated.
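The following deliberately simplified Python sketch shows how engagement ranking and personalization can reinforce each other. It is not any platform's actual ranking system. The catalog, the integer engagement scores, the `affinity_bonus` of 10 points per prior view, and the assumption that the user always clicks the top item are all hypothetical. The "outrage" topic stands in for sensational content that is assumed to score high on predicted engagement.

```python
from collections import Counter

# Hypothetical catalog: (item_id, topic, predicted_engagement).
# Topics and scores are invented for illustration only.
CATALOG = [
    (1, "news", 50),
    (2, "sports", 46),
    (3, "outrage", 55),
    (4, "outrage", 38),
    (5, "outrage", 36),
    (6, "science", 42),
]


def rank_feed(history, k=3, affinity_bonus=10):
    """Rank items by predicted engagement plus a bonus for each past view of the same topic."""
    views = Counter(history)
    scored = sorted(
        CATALOG,
        key=lambda item: item[2] + affinity_bonus * views[item[1]],
        reverse=True,
    )
    return scored[:k]


def simulate(rounds=5, k=3):
    """Assume the user always clicks the top-ranked item; return the topics shown each round."""
    history = []
    shown = []
    for _ in range(rounds):
        feed = rank_feed(history, k)
        shown.append([topic for _, topic, _ in feed])
        history.append(feed[0][1])  # the click feeds back into the next ranking
    return shown


if __name__ == "__main__":
    for i, topics in enumerate(simulate(), start=1):
        print(f"round {i}: {topics}")
```

Under these assumptions, the first feed mixes three topics. From the third round onward it contains only the highest-engagement topic, even though nothing in the code explicitly filters out the others. The narrowing comes entirely from feeding clicks back into the ranking.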

Recommender Systems and Content Amplification

Recommender systems, designed to suggest relevant content, often inadvertently promote extremist material. These seemingly innocuous suggestions can lead users down a rabbit hole of increasingly radical content.

- Related Video/Article Suggestions: After a user watches one extremist video, algorithms may suggest similar content, leading to a rapid escalation of exposure to radical ideologies.

- Group Recommendations: Algorithms may suggest joining extremist groups or communities, further reinforcing radical beliefs and providing a sense of belonging.

- Speed of Radicalization: Radicalization can occur much faster online than in traditional offline environments, in part because of the efficiency of these recommender systems.

The ease with which extremist content is amplified through these systems presents a significant challenge to online safety.
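The "rabbit hole" effect can be illustrated with a toy autoplay loop. The sketch assumes a hypothetical recommender that looks at the unwatched items sharing at least one tag with the current item and picks the one with the highest predicted engagement. Following the points above, it also assumes that more intense content tends to score higher on engagement. The items, tags, engagement scores, and intensity labels are all invented.

```python
# Hypothetical items: tags, a predicted engagement score, and an "intensity"
# label (0 = mainstream, 3 = extreme). Every value is invented for illustration.
ITEMS = {
    "a": {"tags": {"politics"}, "engagement": 10, "intensity": 0},
    "b": {"tags": {"politics", "grievance"}, "engagement": 25, "intensity": 1},
    "c": {"tags": {"grievance", "conspiracy"}, "engagement": 40, "intensity": 2},
    "d": {"tags": {"conspiracy", "extremist"}, "engagement": 60, "intensity": 3},
    "e": {"tags": {"politics", "policy"}, "engagement": 15, "intensity": 0},
}


def next_recommendation(current, watched):
    """Pick the unwatched item that shares a tag with `current` and has the highest engagement."""
    candidates = [
        name
        for name, item in ITEMS.items()
        if name not in watched and item["tags"] & ITEMS[current]["tags"]
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda name: ITEMS[name]["engagement"])


def autoplay_chain(start, max_steps=10):
    """Follow the 'related' recommendation from `start` until nothing related is left."""
    chain = [start]
    watched = {start}
    while len(chain) < max_steps:
        nxt = next_recommendation(chain[-1], watched)
        if nxt is None:
            break
        chain.append(nxt)
        watched.add(nxt)
    return chain


if __name__ == "__main__":
    chain = autoplay_chain("a")
    print(chain, [ITEMS[n]["intensity"] for n in chain])
```

Starting from the mainstream item `a`, the chain runs `a → b → c → d`, and intensity rises from 0 to 3. Each step is only one shared tag away from the previous item, so every individual suggestion looks "related." The lower-engagement mainstream item `e` is never reached.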

The Difficulty of Content Moderation

Tech companies face enormous challenges in moderating the vast quantities of user-generated content on their platforms; the sheer volume alone makes consistent, effective moderation extremely difficult.

- The Moderation Arms Race: There's a constant "arms race" between those creating extremist content and the companies trying to remove it. As moderation techniques improve, so do the methods used to circumvent them.

- Detecting Subtle Extremism: Identifying subtle forms of extremism, such as coded language or dog whistles, is challenging, requiring sophisticated AI and human review processes.

- Human Error and Bias: Human moderators, despite their best efforts, can be susceptible to errors and biases, potentially leading to inconsistent application of content moderation policies.

The limitations of current content moderation approaches highlight the urgent need for more effective strategies.
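A toy keyword filter shows why coded language keeps moderation in an arms race. In the sketch, `bannedterm` is a placeholder for a prohibited phrase. The character-substitution table is a small hypothetical sample of common obfuscations. Real moderation systems rely on far more than blocklists.

```python
import re

# Hypothetical blocklist; "bannedterm" is a placeholder for a real prohibited phrase.
BLOCKLIST = {"bannedterm"}

# Assumed sample of character substitutions (leetspeak); real evasion is far more varied.
LEET = str.maketrans({"4": "a", "3": "e", "1": "i", "0": "o", "5": "s", "@": "a", "$": "s"})


def naive_filter(text):
    """Flag text only if a whitespace-separated word exactly matches a blocklisted term."""
    return any(word in BLOCKLIST for word in text.lower().split())


def normalized_filter(text):
    """Undo simple substitutions and strip separators before searching for blocklisted terms."""
    cleaned = text.lower().translate(LEET)
    cleaned = re.sub(r"[^a-z]", "", cleaned)  # drop spaces, hyphens, dots used to split words
    return any(term in cleaned for term in BLOCKLIST)


if __name__ == "__main__":
    for post in ["this is bannedterm", "this is b4nn3d-t3rm",
                 "see you at the usual picnic", "the banned term list"]:
        print(f"{post!r}: naive={naive_filter(post)} normalized={normalized_filter(post)}")
```

Normalization catches the obfuscated spelling that the naive filter misses, but it has clear limits. A coded phrase that contains no listed term ("the usual picnic") still passes. Stripping spaces also flags innocent text such as "the banned term list." These are the cases where context and human review remain necessary.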

Legal and Ethical Responsibilities of Tech Companies

The question of tech company responsibility in the context of online radicalization is complex, with significant legal and ethical dimensions.

Section 230 and Its Implications

Section 230 of the Communications Decency Act in the US (and comparable provisions in some other jurisdictions) largely shields online platforms from liability for user-generated content. However, how it applies to online radicalization and mass shootings is hotly debated.

- Arguments for Section 230: Supporters argue that Section 230 protects free speech and innovation online. Removing this protection could stifle online expression and lead to excessive censorship.

- Arguments Against Section 230: Critics argue that Section 230 shields tech companies from accountability for the harmful content on their platforms, creating a permissive environment for the spread of extremist ideologies.

- Potential Legislative Changes: The ongoing debate has produced proposals to amend Section 230, and any such change could alter the liability landscape for tech companies.

This legal uncertainty leaves tech companies' accountability in a gray area.

Ethical Considerations and Corporate Social Responsibility

Beyond legal obligations, tech companies have a strong ethical responsibility to address the issue of online radicalization.

- Proactive Measures: Tech companies should proactively implement measures to prevent the spread of extremist content, including improved algorithm design and content moderation techniques.

- Corporate Social Responsibility: Corporate social responsibility necessitates that tech companies take ownership of the impact of their platforms and actively work to mitigate the risks of online radicalization.

- Self-Regulation: While government regulation is important, self-regulation by tech companies is also crucial to ensure responsible platform governance.

The ethical considerations surrounding algorithm design and content moderation are paramount.

The Complex Relationship Between Algorithms and Violence

Understanding the relationship between algorithms and violence requires avoiding oversimplification.

Correlation vs. Causation

While algorithms can contribute to a harmful environment, it's crucial to avoid equating correlation with causation. Algorithms do not directly cause violence.

- Multiple Contributing Factors: Mass shootings stem from a confluence of factors, including mental health issues, societal inequalities, and individual agency, not solely online radicalization.

- The Role of Mental Health: Mental health can be a factor in some violent acts, although most people with mental illness are never violent. Addressing mental health challenges is nonetheless an important part of any strategy to prevent mass shootings.

- Societal Factors: Societal factors such as political polarization, economic inequality, and access to weapons also play a significant role.

Any assessment of tech companies' responsibility has to weigh these factors alongside the role of algorithms.

Potential Solutions and Mitigation Strategies

Several potential solutions and mitigation strategies can reduce the risk of algorithm-driven radicalization.

- Improved Content Moderation: Investing in more sophisticated AI-powered moderation techniques, coupled with improved human review processes, is essential.

- Algorithm Transparency: Greater transparency in algorithm design and functionality can help identify and address potential biases.

- User Education and Reporting: Educating users about the risks of online radicalization and providing easy-to-use reporting mechanisms is crucial.

A multi-faceted approach combining technological improvements, policy changes, and user education is vital.
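One common way to combine automated moderation with human review is confidence-based triage. The sketch assumes a hypothetical classifier that outputs a "likely violating" score between 0 and 1. The thresholds (0.95 for automatic removal, 0.60 for human review) are illustrative, not any platform's actual policy.

```python
from collections import Counter

# Illustrative thresholds; real values would be tuned per policy and per classifier.
REMOVE_AT = 0.95
REVIEW_AT = 0.60


def route(score: float) -> str:
    """Map a hypothetical classifier's 'likely violating' score to a moderation action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= REMOVE_AT:
        return "remove"
    if score >= REVIEW_AT:
        return "human_review"
    return "allow"


def triage(scores):
    """Count how many posts land in each queue."""
    return Counter(route(s) for s in scores)


if __name__ == "__main__":
    print(triage([0.99, 0.97, 0.80, 0.65, 0.30, 0.05]))
```

The design choice lies in the middle band. Lowering `REVIEW_AT` sends more borderline posts, such as coded or subtle content, to human moderators. That improves coverage but adds to the volume problem moderators already face.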

Conclusion

The relationship between algorithms, online radicalization, and mass shootings is undeniably complex. While algorithms don't directly cause violence, they can create an environment conducive to extremist ideologies by amplifying harmful content and fostering echo chambers. Tech companies bear significant responsibility to address this issue: ethically, and potentially legally, depending on how the Section 230 debate is resolved. Mitigating the risk of algorithms contributing to violence will require a multifaceted approach: improved algorithm design, more effective content moderation, enhanced user education, and thoughtful policy changes. The question remains: how can we ensure that the algorithms shaping our online world are not inadvertently fueling extremism and violence? Answering it will take a collaborative effort to prevent algorithm-driven radicalization and protect society.

Featured Posts

-

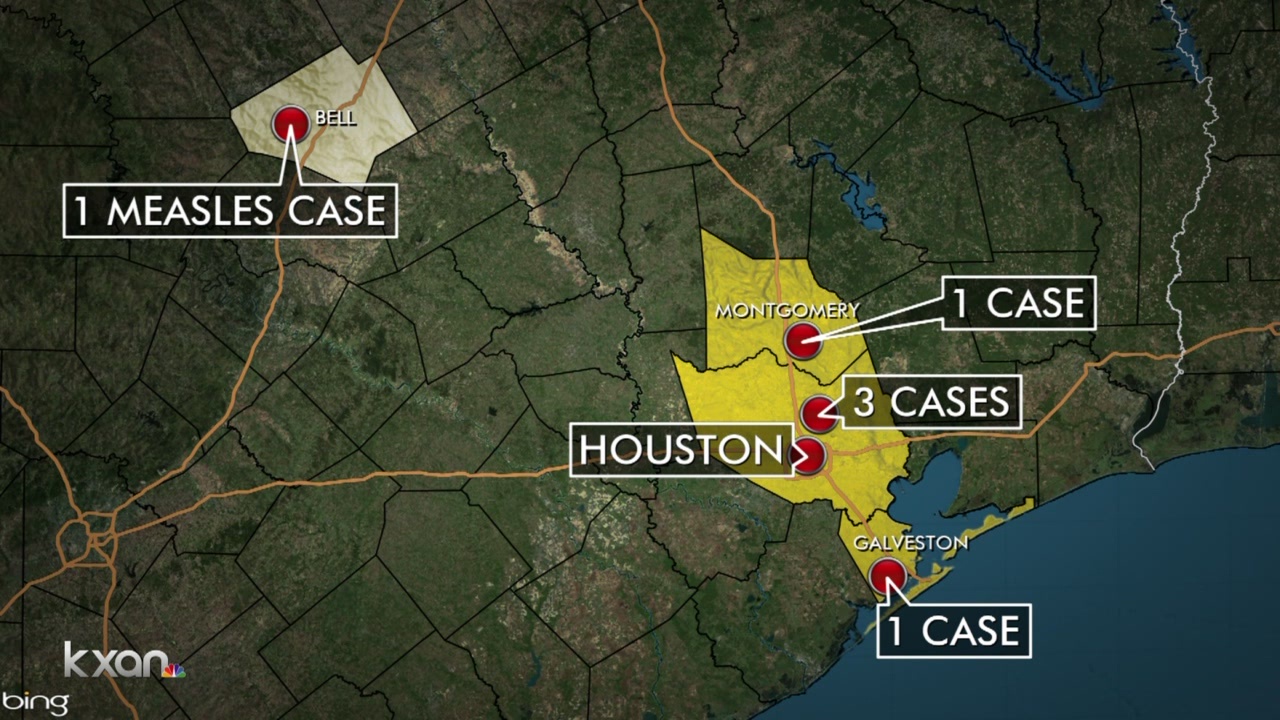

Unrelated Measles Cases On The Rise In Texas

May 30, 2025

Unrelated Measles Cases On The Rise In Texas

May 30, 2025 -

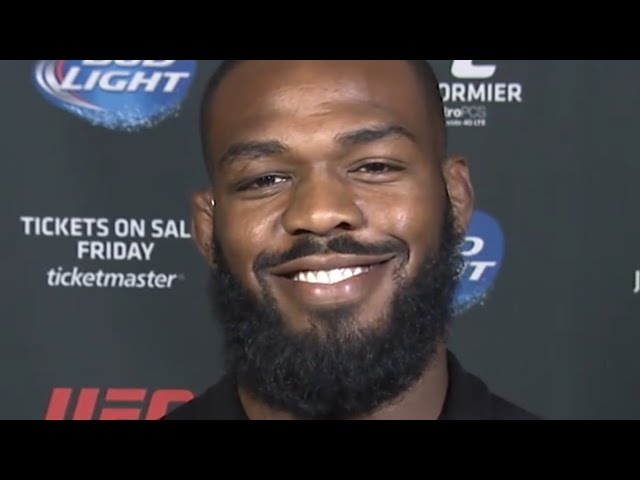

Jon Jones And Daniel Cormier An Unresolved Rivalry

May 30, 2025

Jon Jones And Daniel Cormier An Unresolved Rivalry

May 30, 2025 -

Quebec Marketing Firm Receives 330 K From Via Rail For High Speed Rail Campaign

May 30, 2025

Quebec Marketing Firm Receives 330 K From Via Rail For High Speed Rail Campaign

May 30, 2025 -

Frankenshteyn Smotrim Treyler Ot Del Toro Etu Subbotu

May 30, 2025

Frankenshteyn Smotrim Treyler Ot Del Toro Etu Subbotu

May 30, 2025 -

Glastonbury Festival Resale Chaos Fans Battle For Tickets

May 30, 2025

Glastonbury Festival Resale Chaos Fans Battle For Tickets

May 30, 2025