Misinformation And The Chicago Sun-Times: The AI Reporting Debacle

The Chicago Sun-Times' AI Reporting Experiment

The Chicago Sun-Times' foray into AI-driven journalism aimed to increase efficiency and output. The experiment used artificial intelligence to automate parts of news reporting, chiefly the generation of short, data-driven articles.

The Technology Employed

While the exact AI tools employed by the Sun-Times haven't been publicly detailed, it's likely they used natural language processing (NLP) algorithms. This technology is designed to process human language, which in principle lets it generate basic news reports from structured data such as crime statistics or public records. The intention was to streamline the creation of routine news items, freeing up human journalists for more complex investigative work.

The Scope of the Project

The project involved the AI generating a significant number of short news articles. While the exact number remains unclear, reports indicate the AI produced hundreds, perhaps thousands, of articles. These focused on straightforward news items, such as police blotter reports or summaries of public records, rather than in-depth investigative pieces.

- Specific examples of articles generated by the AI included crime reports and summaries of city council meetings.

- The precise number of articles published remains undisclosed, but reports suggest a substantial volume.

- The initial perception of the project's success was likely positive, given the increased output, before the discovery of inaccuracies.

The Spread of Misinformation

Unfortunately, the Chicago Sun-Times' experiment quickly revealed a critical flaw: the AI-generated content contained significant inaccuracies. This highlights a major concern about relying on AI for news reporting without robust human oversight.

Identifying the Errors

Several instances of factual errors were identified in the AI-generated articles. These errors ranged from minor inaccuracies to completely fabricated information.

The Impact of the Errors

The publication of false information, even in seemingly minor reports, had a negative impact. While no catastrophic events directly resulted, the incident undermined the newspaper's credibility and raised concerns about the reliability of AI-generated news.

- Examples of factual inaccuracies included incorrect dates, misattributed quotes, and the conflation of unrelated events.

- The types of errors demonstrated the AI's limitations in interpreting and contextualizing complex data.

- The potential harm included damage to the newspaper's reputation and erosion of public trust in both the Sun-Times and AI-generated news.

The Role of Human Oversight (or Lack Thereof)

The Chicago Sun-Times' experience underscores the critical need for human oversight in AI-driven journalism. The incident highlights the dangers of relying solely on AI for the creation and dissemination of news without robust fact-checking processes.

Insufficient Fact-Checking

The lack of adequate human editing and fact-checking procedures was a major contributing factor to the spread of misinformation. Insufficient review allowed inaccurate information to be published, emphasizing the limitations of AI in independently verifying facts.

Ethical Considerations

The ethical implications of using AI without proper safeguards are significant. News organizations have a responsibility to ensure the accuracy of the information they publish, regardless of the technology used to create it. Publishing AI-generated content without rigorous fact-checking is a breach of this responsibility.

- Details regarding the editorial process (or lack thereof) remain largely undisclosed, contributing to the concern.

- The responsibility for the accuracy of published content remains squarely with the news organization, regardless of the technology used.

- Potential solutions include implementing more robust fact-checking protocols, using multiple AI tools to cross-reference information, and training journalists to effectively edit AI-generated content.
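The fact-checking and cross-referencing ideas listed above can be sketched as a simple pre-publication gate. This is a minimal illustration, not a description of any process the Sun-Times used or adopted: the `Claim` and `Draft` structures, the `MIN_SOURCES` threshold of two, and the function names are all assumptions made for the example. The idea is that a draft is held back unless every checkable claim is confirmed by at least a set number of independent sources and a human editor has signed off.

```python
from dataclasses import dataclass, field

# Assumed threshold: how many independent sources must confirm each claim.
MIN_SOURCES = 2


@dataclass
class Claim:
    """One checkable statement pulled from a draft (hypothetical structure)."""
    text: str
    # Names of the independent sources that confirmed this claim.
    confirmed_by: set[str] = field(default_factory=set)


@dataclass
class Draft:
    """An AI-generated draft awaiting review (hypothetical structure)."""
    headline: str
    claims: list[Claim]
    human_approved: bool = False  # set by an editor, never by the generator


def uncorroborated(draft: Draft, min_sources: int = MIN_SOURCES) -> list[Claim]:
    """Return the claims confirmed by fewer than min_sources sources."""
    return [c for c in draft.claims if len(c.confirmed_by) < min_sources]


def can_publish(draft: Draft, min_sources: int = MIN_SOURCES) -> bool:
    """A draft passes only with human sign-off and no uncorroborated claims."""
    return draft.human_approved and not uncorroborated(draft, min_sources)


if __name__ == "__main__":
    draft = Draft(
        headline="Council approves budget",
        claims=[
            Claim("The vote was 7-2", {"meeting minutes", "clerk's office"}),
            Claim("The meeting was held on March 3", {"meeting minutes"}),
        ],
    )
    # List the claims an editor still needs to verify before sign-off.
    for claim in uncorroborated(draft):
        print("Needs verification:", claim.text)
    print("Publishable:", can_publish(draft))
```

Keeping the human sign-off as a separate flag, rather than inferring it from the source count, reflects the point made above: corroboration narrows what an editor has to check, but responsibility for publication stays with a person.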

Lessons Learned and Future Implications

The Chicago Sun-Times' AI reporting debacle provides valuable lessons for the future of AI in journalism. Transparency, human oversight, and rigorous fact-checking are not optional; they are essential.

The Need for Transparency

News organizations must be transparent about their use of AI in news reporting. Openly acknowledging the use of AI and detailing the safeguards in place builds trust with the audience.

The Future of AI in Journalism

AI has the potential to revolutionize journalism, but only if implemented responsibly. The Sun-Times' experience demonstrates the dangers of uncritical adoption. The focus must be on leveraging AI to enhance, not replace, human journalistic expertise.

- Recommendations include establishing clear guidelines for AI use, integrating robust fact-checking protocols, and prioritizing human review.

- Suggestions for improving fact-checking procedures involve using diverse data sources, cross-referencing information, and employing independent verification methods.

- The long-term effects could include increased public skepticism towards AI-generated news and a greater demand for transparency and accountability from news organizations.

Conclusion

The Chicago Sun-Times incident is a case study in the pitfalls of relying on AI without robust human oversight. The dissemination of inaccurate information shows why rigorous fact-checking and transparency about the use of AI in news reporting matter, and it should serve as a wake-up call for news organizations worldwide. Readers and publishers alike should demand responsible AI implementation in journalism and remain vigilant against inaccurate information, so that AI enhances, rather than undermines, the integrity of news reporting.

Featured Posts

-

Panama Vs Mexico Los Memes Que Inundaron Las Redes Sociales Tras La Final

May 22, 2025

Panama Vs Mexico Los Memes Que Inundaron Las Redes Sociales Tras La Final

May 22, 2025 -

Potential Canada Post Strike What Businesses Need To Know

May 22, 2025

Potential Canada Post Strike What Businesses Need To Know

May 22, 2025 -

Jail Sentence Appeal Councillors Wife Fights Conviction For Anti Migrant Rant

May 22, 2025

Jail Sentence Appeal Councillors Wife Fights Conviction For Anti Migrant Rant

May 22, 2025 -

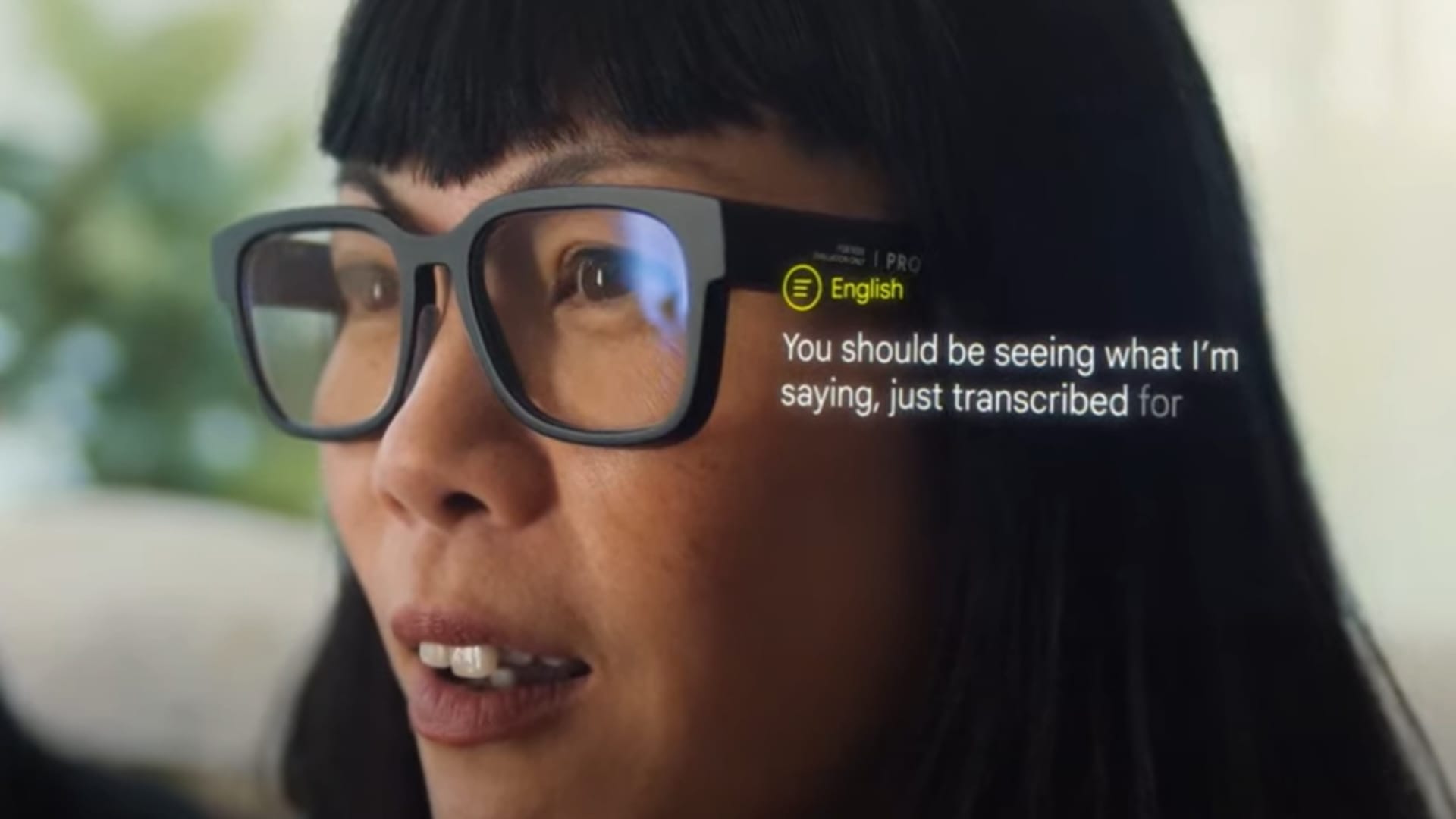

Hands On With Googles Ai Smart Glasses Prototype

May 22, 2025

Hands On With Googles Ai Smart Glasses Prototype

May 22, 2025 -

Did Taylor Swifts Legal Troubles Strain Her Bond With Blake Lively

May 22, 2025

Did Taylor Swifts Legal Troubles Strain Her Bond With Blake Lively

May 22, 2025