The Potential For Surveillance: Examining AI Therapy's Role In A Police State

H2: Data Collection and Privacy Concerns in AI Therapy

AI therapy platforms promise convenient and accessible mental healthcare, but this convenience comes at a cost. The very nature of these platforms raises serious data collection and privacy concerns, especially when considering their potential use within a police state.

H3: The Scope of Data Collected

AI therapy platforms collect vast amounts of personal data, far exceeding what traditional therapy might gather. This data is incredibly sensitive and revealing of an individual's vulnerabilities, making it a powerful tool for surveillance. The scope of data collection includes:

- Detailed emotional states revealed through sentiment analysis: AI algorithms analyze tone, word choice, and even vocal inflections to gauge emotional responses, potentially revealing sensitive information about an individual's political views, social relationships, or personal anxieties.

- Protected health information (PHI) directly related to mental health conditions: This includes diagnoses, treatment plans, and details of an individual's mental health history, all of which can be deeply stigmatizing if misused.

- Behavioral patterns and responses to specific stimuli: AI tracks how users respond to different prompts and therapeutic interventions, building a comprehensive profile of their psychological makeup and tendencies.

H3: Data Security and Potential Breaches

The security of this sensitive data is a major concern. Even with robust security measures, the potential for misuse by authorities is significant. Current vulnerabilities include:

- Lack of robust data encryption standards in some AI therapy platforms: Some platforms lack sufficient encryption, leaving stored and transmitted data vulnerable to unauthorized access.

- Vulnerability to hacking and data leaks: Like any digital system, AI therapy platforms are susceptible to hacking and data breaches, potentially exposing highly sensitive personal information.

- Lack of transparency regarding data storage and usage policies: Users often lack clarity on where their data is stored, how it is used, and who has access to it. This lack of transparency exacerbates privacy concerns.

H2: AI Therapy as a Tool for State Surveillance

The data collected by AI therapy platforms can be easily weaponized in a police state, transforming a tool for mental health support into an instrument of oppression.

H3: Profiling and Predictive Policing

AI algorithms can analyze the vast datasets generated by AI therapy platforms to create detailed psychological profiles of individuals. This creates the potential for:

- Identifying individuals expressing dissent or critical views of the government: Algorithms might flag individuals expressing dissatisfaction with the government or engaging in activities deemed subversive.

- Targeting individuals based on perceived psychological vulnerabilities: Individuals deemed "unstable" or "at risk" based on their therapy data could be singled out by authorities.

- Flagging individuals for increased surveillance or harassment: Profiles created from AI therapy data could be used to place individuals under closer watch, or to justify preemptive arrests and other forms of social control.

H3: Manipulation and Coercion

The intimate nature of the data collected during AI therapy sessions offers opportunities for state manipulation and coercion:

- Personalized disinformation campaigns targeting specific vulnerabilities: Governments could use insights from AI therapy data to tailor propaganda campaigns to exploit individual weaknesses and insecurities.

- Psychological manipulation to influence behavior and compliance: Data on how individuals respond to specific prompts and interventions could be used to design messaging calibrated to steer their behavior.

- Blackmail using sensitive information revealed during therapy sessions: Individuals could be blackmailed or coerced into compliance by threatening to expose their private thoughts and feelings.

H2: Ethical and Legal Implications of AI Therapy in Authoritarian Regimes

The ethical and legal implications of AI therapy in authoritarian regimes are profound and demand urgent attention.

H3: The Absence of Regulatory Frameworks

Many countries lack the necessary legal frameworks to protect individuals from the misuse of data collected through AI therapy. This deficiency is particularly concerning in police states where individual rights are often suppressed. Key shortcomings include:

- Inadequate data privacy laws: Existing laws often fail to address the unique privacy challenges posed by AI therapy platforms.

- Lack of oversight and accountability for AI therapy providers: There is little oversight of how AI therapy platforms collect, store, and use personal data.

- Absence of mechanisms for redress in case of data misuse: Individuals have limited recourse if their data is misused or their privacy is violated.

H3: The Erosion of Trust and Mental Healthcare Access

The fear of surveillance can have a chilling effect on individuals seeking mental healthcare. The potential for misuse of data from AI therapy platforms can:

- Reduce willingness to participate in therapy due to privacy concerns: Individuals may be hesitant to disclose sensitive information for fear of state surveillance.

- Increase social stigma surrounding mental health treatment: The fear of being labeled "unstable" or "disloyal" based on therapy data could further stigmatize mental illness.

- Diminish trust in mental health professionals: If patients come to believe that what they disclose may reach the state, they may avoid clinicians altogether, limiting access to essential services.

H2: Conclusion

The integration of AI into therapy offers considerable potential for improving mental healthcare. However, the potential for surveillance inherent in AI therapy, particularly within a police state, cannot be ignored, and the collection and use of such personal data raise serious ethical and legal questions. Preventing AI therapy from becoming a tool of oppression requires robust safeguards: stronger regulation, greater transparency about how data is stored and used, and responsible innovation. We should engage actively in the debate over the ethical implications of AI therapy and demand better data privacy protections, so that our innermost thoughts and feelings cannot be turned against us and AI therapy remains a tool for healing, not a weapon.

Featured Posts

-

Jiskefet Absurdistische Humor Bekroond Met Ere Zilveren Nipkowschijf

May 16, 2025

Jiskefet Absurdistische Humor Bekroond Met Ere Zilveren Nipkowschijf

May 16, 2025 -

Earthquakes Loss To Rapids Underscores Goalkeeping Concerns Steffens Role Analyzed

May 16, 2025

Earthquakes Loss To Rapids Underscores Goalkeeping Concerns Steffens Role Analyzed

May 16, 2025 -

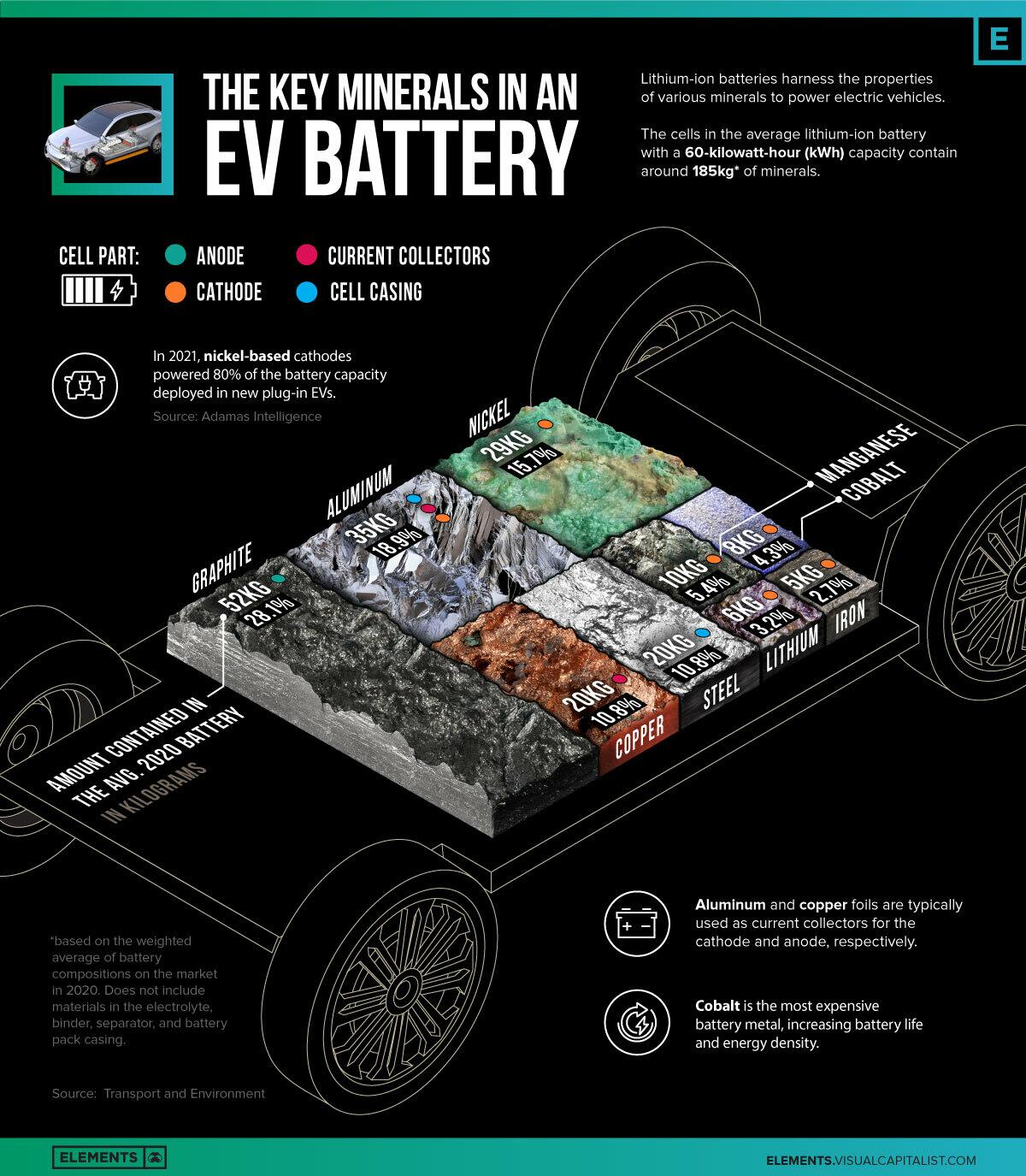

Congos Cobalt Export Ban Market Impact And The Awaiting Quota Plan

May 16, 2025

Congos Cobalt Export Ban Market Impact And The Awaiting Quota Plan

May 16, 2025 -

Partido Almeria Eldense Transmision En Directo Por La Liga Hyper Motion

May 16, 2025

Partido Almeria Eldense Transmision En Directo Por La Liga Hyper Motion

May 16, 2025 -

50 000 Fine For Anthony Edwards Nba Addresses Players Conduct

May 16, 2025

50 000 Fine For Anthony Edwards Nba Addresses Players Conduct

May 16, 2025