OpenAI Facing FTC Investigation: Concerns Over ChatGPT's Data Practices and AI Safety

FTC Investigation: What Are the Key Allegations?

The Federal Trade Commission (FTC), the U.S. agency responsible for protecting consumers and promoting competition, has opened an investigation into OpenAI over concerns about the company's practices. The full scope of the investigation has not been made public. However, news reports and expert analysis point to several key allegations. These allegations suggest potential violations of consumer protection law. They also raise significant ethical questions about how powerful AI models like ChatGPT are developed and deployed.

- Violation of consumer protection laws related to data privacy: The FTC is reportedly investigating whether OpenAI's data collection and usage practices comply with existing consumer protection laws, particularly regarding the privacy of user data used to train the ChatGPT LLM. This includes examining the consent mechanisms employed and the transparency of data handling processes.

- Failure to adequately address potential biases and discriminatory outputs from ChatGPT: Concerns exist that ChatGPT, trained on massive datasets, may perpetuate and even amplify existing societal biases, leading to discriminatory outputs. The FTC's investigation is likely probing OpenAI's efforts (or lack thereof) to mitigate these biases and ensure fair and equitable outcomes.

- Insufficient measures to mitigate the risks of misinformation and harmful content generation: ChatGPT's ability to generate realistic-sounding text raises concerns about its potential for misuse in spreading misinformation and creating harmful content. The FTC is investigating whether OpenAI has implemented adequate safeguards to prevent such outcomes.

- Lack of transparency regarding data collection and usage practices: Critics argue that OpenAI lacks sufficient transparency regarding how it collects, uses, and protects user data. The investigation will likely scrutinize the company's data privacy policies and their clarity for users.

For further information, refer to official FTC statements.

ChatGPT's Data Practices: Privacy Concerns at the Forefront

ChatGPT's functionality relies heavily on vast amounts of data collected from various sources, including user interactions. This data fuels the model's ability to generate human-like text, but it also raises significant privacy concerns. OpenAI's data practices are under intense scrutiny because of the potential vulnerabilities they pose.

The potential vulnerabilities and risks associated with ChatGPT's data collection include:

- Potential for data breaches and unauthorized access: Storing and processing such large volumes of user data increases the risk of data breaches and unauthorized access, which could expose sensitive personal information.

- Concerns about the use of user data for training and improving the model: Users may not fully understand how their data is used to continuously train and improve the model. This raises concerns about implicit consent and potential exploitation of user information.

- Lack of granular control over data deletion and usage preferences: Users may lack sufficient control over their data, with limited options for deleting information or specifying how it can be used.

- Potential for the inadvertent disclosure of sensitive personal information: Because ChatGPT is conversational, users may inadvertently reveal sensitive personal information while chatting. That information could then be incorporated into the model's training data.

These concerns should be viewed in the context of existing data protection regulations. These include the GDPR (General Data Protection Regulation) in the European Union and the CCPA (California Consumer Privacy Act) in California. The FTC itself enforces federal law, chiefly Section 5 of the FTC Act, which prohibits unfair or deceptive practices. Its investigation therefore turns on that standard rather than on GDPR or CCPA compliance directly.

AI Safety Concerns: Mitigating the Risks of Generative AI

Beyond data privacy, the FTC investigation touches upon the broader ethical and safety implications of generative AI models like ChatGPT. The potential for misuse and unforeseen consequences is a significant concern.

Specific safety concerns include:

- Potential for the spread of misinformation and disinformation: ChatGPT can generate convincing but false information. This could accelerate the spread of misinformation and disinformation, potentially influencing public opinion and even affecting democratic processes.

- Risks associated with the generation of harmful or offensive content: ChatGPT can be used to generate hateful, abusive, or otherwise harmful content. This raises concerns about online harassment, hate speech, and the spread of harmful ideologies.

- Misuse of the technology for malicious purposes: Bad actors could exploit the technology to generate phishing emails, create deepfakes, or automate cyberattacks.

- The challenge of ensuring algorithmic fairness and preventing bias: As discussed earlier, the model can amplify biases present in its training data, leading to unfair or discriminatory outcomes. Mitigating these biases is a significant challenge in responsible AI development.

The importance of responsible AI development and deployment cannot be overstated. OpenAI, and the broader AI industry, must prioritize the development of robust safety mechanisms and ethical guidelines to mitigate the risks associated with powerful AI models like ChatGPT.

Conclusion

The OpenAI FTC investigation underscores the critical need for increased scrutiny and responsible innovation within the AI industry. The concerns surrounding ChatGPT's data practices and AI safety highlight the potential risks associated with the widespread adoption of advanced generative AI technologies. The investigation's outcome will likely have significant implications for the future regulation of AI, influencing how companies develop, deploy, and manage their AI systems. The key takeaways are the crucial need for robust data protection measures, mechanisms to mitigate bias and harmful outputs, and enhanced transparency concerning data collection and usage.

Staying informed about developments in this case and advocating for stronger regulation of data privacy and AI safety is crucial. Let's work together to ensure the ethical development and use of AI, including technologies like ChatGPT. Continue following news about the OpenAI FTC investigation for updates on this evolving story.

Featured Posts

-

Cassidy Hutchinson Memoir A Look Inside The January 6th Hearings

Apr 26, 2025

Cassidy Hutchinson Memoir A Look Inside The January 6th Hearings

Apr 26, 2025 -

Us Port Fee Increases A 70 Million Loss For One Auto Carrier

Apr 26, 2025

Us Port Fee Increases A 70 Million Loss For One Auto Carrier

Apr 26, 2025 -

Safety Concerns Surrounding The Dam During Ajaxs 125th Anniversary

Apr 26, 2025

Safety Concerns Surrounding The Dam During Ajaxs 125th Anniversary

Apr 26, 2025 -

Rural School 2700 Miles From Dc Feeling The Impact Of Trumps First 100 Days

Apr 26, 2025

Rural School 2700 Miles From Dc Feeling The Impact Of Trumps First 100 Days

Apr 26, 2025 -

Pentagon Leaks And Infighting Pete Hegseth Under Pressure

Apr 26, 2025

Pentagon Leaks And Infighting Pete Hegseth Under Pressure

Apr 26, 2025

Latest Posts

-

Regulatory Changes Sought By Indian Insurers For Bond Forwards

May 10, 2025

Regulatory Changes Sought By Indian Insurers For Bond Forwards

May 10, 2025 -

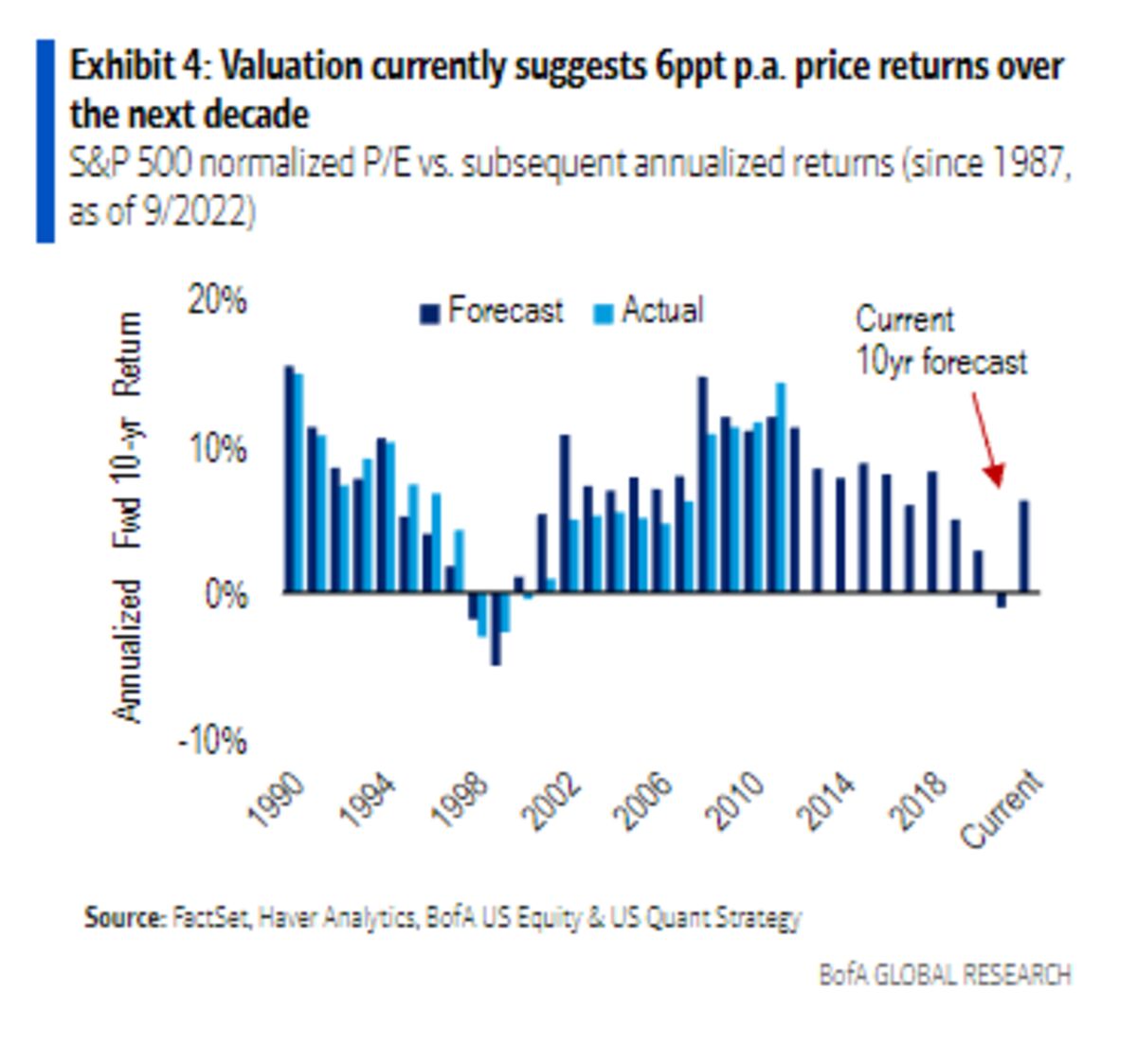

Should Investors Worry About Current Stock Market Valuations Bof As Answer

May 10, 2025

Should Investors Worry About Current Stock Market Valuations Bof As Answer

May 10, 2025 -

Indian Insurance Sector Seeks Simplification Of Bond Forward Regulations

May 10, 2025

Indian Insurance Sector Seeks Simplification Of Bond Forward Regulations

May 10, 2025 -

Call For Regulatory Reform Indian Insurers And Bond Forwards

May 10, 2025

Call For Regulatory Reform Indian Insurers And Bond Forwards

May 10, 2025 -

Indian Insurers Seek Regulatory Easing On Bond Forwards

May 10, 2025

Indian Insurers Seek Regulatory Easing On Bond Forwards

May 10, 2025